Top trends in big data for 2024 and beyond

Big data is driving changes in how organizations process, store and analyze data. The benefits are spurring even more innovation. Here are four big trends.

Big data is proving its value to organizations of all types and sizes in a wide range of industries. Enterprises that make advanced use of it are realizing tangible business benefits, from improved efficiency in operations and increased visibility into rapidly changing business environments to the optimization of products and services for customers.

The result is that as organizations find uses for these typically large stores of data, big data technologies, practices and approaches are evolving. New types of big data architectures and techniques for collecting, processing, managing and analyzing the gamut of data across an organization continue to emerge.

Dealing with big data is more than just dealing with large volumes of stored information. Volume is just one of the many V's of big data that organizations need to address. There usually is also a significant variety of data -- from structured information sitting in databases distributed throughout the organization to vast quantities of unstructured and semistructured data residing in files, images, videos, sensors, system logs, text and documents, including paper ones that are waiting to be digitized. In addition, this information often is created and changed at a rapid rate (velocity) and has varying levels of data quality (veracity), creating further challenges on data management, processing and analysis.

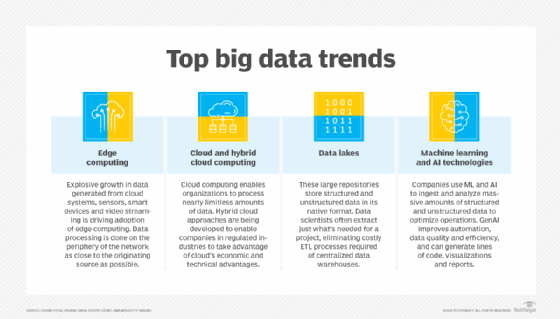

Four major trends in big data, identified by industry experts, are helping organizations meet those challenges and get the benefits they're seeking. Here's a look at the trends and what they mean for organizations that are investing in big data deployments.

1. Generative AI, advanced analytics and machine learning continue to evolve

With the vast amount of data being generated, traditional analytics approaches are challenged because they're not easily automated for data analysis at scale. Distributed processing technologies, especially those promoted by open source platforms such as Hadoop and Spark, enable organizations to process petabytes of information at rapid speed. Enterprises are then using big data analytics technologies to optimize their business intelligence and analytics initiatives, moving past slow reporting tools dependent on data warehouse technology to more intelligent, responsive applications that enable greater visibility into customer behavior, business processes and overall operations.

Big data analytics evolutions continue to focus around machine learning and AI systems. Increasingly, AI is used by organizations of all sizes to optimize and improve their business processes. In the Enterprise Strategy Group spending intentions survey, 63% of the 193 respondents familiar with AI and machine learning initiatives in their organization said they expected it to spend more on those tools in 2023.

Machine learning enables organizations to identify data patterns more easily, detect anomalies in large data sets, and to support predictive analytics and other advanced data analysis capabilities. Some examples of that include the following:

- Recognition systems for image, video and text data.

- Automated classification of data.

- Natural language processing (NLP) capabilities for chatbots and voice and text analysis.

- Autonomous business process automation.

- Personalization and recommendation features in websites and services.

- Analytics systems that can find optimal solutions to business problems among a sea of data.

Indeed, with the help of AI and machine learning, companies are using their big data environments to provide deeper customer support through intelligent chatbots and more personalized interactions without requiring significant increases in customer support staff. These AI-enabled systems are able to collect and analyze vast amounts of information about customers and users, especially when paired with a data lake strategy that can aggregate a wide range of information across many sources.

Enterprises are also seeing innovations in the area of data visualization. People understand the meaning of data better when it's represented in a visualized form, such as charts, graphs and plots. Emerging forms of data visualization are putting the power of AI-enabled analytics into the hands of even casual business users. This helps organizations spot key insights that can improve decision-making. Advanced forms of visualization and analytics tools even let users ask questions in natural language, with the system automatically determining the right query and showing the results in a context-relevant manner.

Generative AI and large language models (LLMs) improve an organization's data operations even more with benefits across the entire data pipeline. Generative AI can help automate data observability monitoring functions, improve quality and efficiency with proactive alerts and fixes for identified issues, and even write lines of code. It can scan large sets of data for errors or inconsistencies or identify patterns and generate reports or visualizations of the most important details for data teams. LLMs provide new data democratization capabilities to organizations. Data cataloging, integration, privacy, governance and sharing are all on the rise as generative AI weaves itself into data management processes.

The power of Generative AI and LLMs is dependent on the quality of the data used to train the model. Data quality is more important than ever as the interest and use of generative AI continues to rise in all industries. Data teams must carefully monitor the results of all AI-generated data operations. Incorrect or misguided data can lead to wrong decisions and costly outcomes.

2. More data, increased data diversity drive advances in processing and the rise of edge computing

The pace of data generation continues to accelerate. Much of this data isn't generated from the business transactions that happen in databases -- instead, it comes from other sources, including cloud systems, web applications, video streaming and smart devices such as smartphones and voice assistants. This data is largely unstructured and in the past was left mostly unprocessed and unused by organizations, turning it into so-called dark data.

That brings us to the biggest trend in big data: Non-database sources will continue to be the dominant generators of data, in turn forcing organizations to reexamine their needs for data processing. Voice assistants and IoT devices, in particular, are driving a rapid ramp-up in big data management needs across industries as diverse as retail, healthcare, finance, insurance, manufacturing and energy and in a wide range of public-sector markets. This explosion in data diversity is compelling organizations to think beyond the traditional data warehouse as a means for processing all this information.

In addition, the need to handle the data being generated is moving to the devices themselves, as industry breakthroughs in processing power have led to the development of increasingly advanced devices capable of collecting and storing data on their own without taxing network, storage and computing infrastructure. For example, mobile banking apps can handle many tasks for remote check deposit and processing without having to send images back and forth to central banking systems for processing.

The use of devices for distributed processing is embodied in the concept of edge computing, which shifts the processing load to the devices themselves before the data is sent to the servers. Edge computing optimizes performance and storage by reducing the need for data to flow through networks. That lowers computing and processing costs, especially cloud storage, bandwidth and processing expenses. Edge computing also helps to speed up data analysis and provides faster responses to the user.

3. Big data storage needs spur innovations in cloud and hybrid cloud platforms, growth of data lakes

To deal with the inexorable increase in data generation, organizations are spending more of their resources storing this data in a range of cloud-based and hybrid cloud systems optimized for all the V's of big data. In previous decades, organizations handled their own storage infrastructure, resulting in massive data centers that enterprises had to manage, secure and operate. The move to cloud computing changed that dynamic. By shifting the responsibility to cloud infrastructure providers -- such as AWS, Google, Microsoft, Oracle and IBM -- organizations can deal with almost limitless amounts of new data and pay for storage and compute capability on demand without having to maintain their own large and complex data centers.

Some industries are challenged in their use of cloud infrastructure due to regulatory or technical limitations. For example, heavily regulated industries -- such as healthcare, financial services and government -- have restrictions that prevent the use of public cloud infrastructure. As a result, over the past decade, cloud providers have developed ways to provide more regulatory-friendly infrastructure, as well as hybrid approaches that combine aspects of third-party cloud systems with on-premises computing and storage to meet critical infrastructure needs. The evolution of both public cloud and hybrid cloud infrastructures will no doubt progress as organizations seek the economic and technical advantages of cloud computing.

In addition to innovations in cloud storage and processing, enterprises are shifting toward new data architecture approaches that allow them to handle the variety, veracity and volume challenges of big data. Rather than trying to centralize data storage in a data warehouse that requires complex and time-intensive extract, transform and load processes, enterprises are evolving the concept of the data lake. Data lakes store structured, semistructured and unstructured data sets in their native format. This approach shifts the responsibility for data transformation and preparation to end users who have different data needs. The data lake can also provide shared services for data analysis and processing.

4. DataOps and data stewardship move to the fore

Many aspects of big data processing, storage and management will see continued evolution for years to come. Much of this innovation is driven by technology needs, but also partly by changes in the way we think about and relate to data.

One area of innovation is the emergence of DataOps, a methodology and practice that focuses on agile, iterative approaches for dealing with the full lifecycle of data as it flows through the organization. Rather than thinking about data in piecemeal fashion with separate people dealing with data generation, storage, transportation, processing and management, DataOps processes and frameworks address organizational needs across the data lifecycle from generation to archiving.

Likewise, organizations are increasingly dealing with data governance, privacy and security issues, a situation that is exacerbated by big data environments. In the past, enterprises often were somewhat lax about concerns around data privacy and governance, but new regulations make them much more liable for what happens to personal information in their systems. Generative AI adds another layer of privacy and ethics concerns for organizations to consider.

Due to widespread security breaches, eroding customer trust in enterprise data-sharing practices, and challenges in managing data over its lifecycle, organizations are becoming more focused on data stewardship and working harder to properly secure and manage data, especially as it crosses international boundaries. New tools are emerging to make sure that data stays where it needs to stay, is secured at rest and in motion, and is appropriately tracked over its lifecycle.

Collectively, these big data trends will continue to shape the big data shape in 2024.

Editor's note: Trends were identified by industry experts and research. This article was written in 2021. TechTarget editors revised it in 2024 to improve the reader experience.