DataOps

What is DataOps?

DataOps is an Agile approach to designing, implementing and maintaining a distributed data architecture that will support a wide range of open source tools and frameworks in production. The goal of DataOps is to create business value from big data.

Inspired by the DevOps movement, the DataOps strategy strives to speed the production of applications running on big data processing frameworks. DataOps also seeks to break down silos across IT operations, data management and software development teams, encouraging line-of-business stakeholders to work with data engineers, data scientists and analysts. The goal is to ensure the organization's data can be used in the most flexible, effective manner possible to achieve positive and reliable business outcomes.

Since it incorporates so many elements from the data lifecycle, DataOps spans a number of information technology disciplines, including data development, data transformation, data extraction, data quality, data governance, data access control, data center capacity planning and system operations. DataOps teams are often managed by an organization's chief data scientist or chief analytics officer and supported by data engineers, data analysts, data stewards and others with responsibilities for data.

As with DevOps, there are no DataOps-specific software tools -- only frameworks and related tool sets that support a DataOps approach to collaboration and increased agility. These tools include ETL/ELT tools, data curation and cataloging tools, log analyzers and systems monitors. Software that supports microservices architectures, as well as open source software that lets applications blend structured and unstructured data, is also associated with the DataOps movement. Examples include MapReduce, HDFS, Kafka, Hive and Spark.

How DataOps works

The goal of DataOps is to combine DevOps and Agile methodologies to manage data in alignment with business goals. If the goal is to raise the lead conversion rate, for example, DataOps would position data to produce better product marketing recommendations, thus converting more leads. Agile processes are used for data governance and analytics development, while DevOps processes are used to optimize code, product builds and delivery.

Building new code is only one part of DataOps. Streamlining and improving the data warehouse is equally important. Similar to the process of lean manufacturing, DataOps uses statistical process control (SPC) to monitor and verify the data analytics pipeline consistently. SPC checks that statistics remain within acceptable ranges, which improves data processing efficiency and raises data quality. If an anomaly or error occurs, SPC helps alert data analysts immediately so they can respond.
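As a rough illustration of how SPC can be applied to a pipeline metric, the sketch below derives control limits from a baseline and flags new values that fall outside them. The metric (daily row counts), the three-sigma rule and the function names are assumptions chosen for the example, not part of any specific DataOps tool.

```python
from statistics import mean, stdev


def control_limits(baseline, sigmas=3.0):
    """Return (lower, upper) control limits computed from baseline values."""
    center = mean(baseline)
    spread = stdev(baseline)  # sample standard deviation
    return center - sigmas * spread, center + sigmas * spread


def out_of_control(values, limits):
    """Return (index, value) pairs that fall outside the control limits."""
    lower, upper = limits
    return [(i, v) for i, v in enumerate(values) if v < lower or v > upper]


# Hypothetical daily row counts observed while the pipeline was healthy.
baseline_row_counts = [1000, 1010, 990, 1005, 995, 1002, 998]
limits = control_limits(baseline_row_counts)

# Hypothetical new observations; the second one is a sharp drop.
new_row_counts = [1001, 640, 1007]
alerts = out_of_control(new_row_counts, limits)
print(alerts)  # [(1, 640)]
```

In practice, the alert list would feed a notification channel so analysts can respond as soon as a value leaves the expected range.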

How to implement DataOps

The volume of data is expected to continue growing exponentially, making implementation of a DataOps strategy critical. The first step to DataOps involves cleaning raw data and developing an infrastructure that makes it readily available for use, typically in a self-service model. Once data is accessible, software, platforms and tools should be developed or deployed to orchestrate data and integrate with current systems. These components will then continuously process new data, monitor performance and produce real-time insights.

A few best practices associated with implementing a DataOps strategy include the following:

- Establish progress benchmarks and performance measurements at every stage of the data lifecycle.

- Define semantic rules for data and metadata early on.

- Incorporate feedback loops to validate the data.

- Use data science tools and business intelligence data platforms to automate as much of the process as possible.

- Optimize processes for dealing with bottlenecks and data silos, typically involving software automation of some sort.

- Design for growth, evolution and scalability.

- Use disposable environments that mimic the real production environment for experimentation.

- Create a DataOps team with a variety of technical skills and backgrounds.

- Treat DataOps as lean manufacturing by focusing on continuous improvements to efficiency.
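Two of the practices above, defining semantic rules early and using feedback loops to validate data, can be sketched in a few lines. The rule set, field names and sample records below are hypothetical, and the approach shown is only one simple way to express such rules.

```python
from dataclasses import dataclass
from typing import Any, Callable


@dataclass(frozen=True)
class Rule:
    column: str                    # field the rule applies to
    description: str               # human-readable statement of the rule
    check: Callable[[Any], bool]   # returns True when the value is valid


# Hypothetical semantic rules agreed on before pipelines are built.
RULES = [
    Rule("email", "must contain '@'",
         lambda v: isinstance(v, str) and "@" in v),
    Rule("age", "must be an integer from 0 to 120",
         lambda v: isinstance(v, int) and 0 <= v <= 120),
]


def validate(record, rules):
    """Return a description of every rule the record violates."""
    return [f"{r.column}: {r.description}"
            for r in rules if not r.check(record.get(r.column))]


def split_by_validity(records, rules):
    """Separate clean records from rejected ones, keeping the reasons."""
    accepted, rejected = [], []
    for record in records:
        problems = validate(record, rules)
        if problems:
            rejected.append((record, problems))
        else:
            accepted.append(record)
    return accepted, rejected


records = [
    {"email": "ada@example.com", "age": 36},
    {"email": "not-an-address", "age": 200},
]
accepted, rejected = split_by_validity(records, RULES)
print(len(accepted), len(rejected))  # 1 1
```

The rejected list, with its reasons, is the feedback loop: it can be routed back to data owners so the source of bad records is fixed rather than patched downstream.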

Benefits of DataOps

Transitioning to a DataOps strategy can bring an organization the following benefits:

- Provides more trustworthy real-time data insights.

- Reduces the cycle time of data science applications.

- Enables better communication and collaboration among teams and team members.

- Increases transparency by using data analysis to anticipate likely scenarios.

- Builds processes to be reproducible and to reuse code whenever possible.

- Ensures better quality data.

- Creates a unified, interoperable data hub.

What a DataOps framework includes

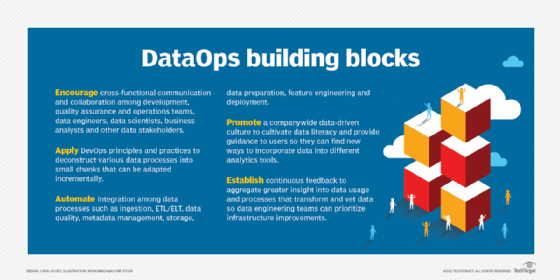

A DataOps framework needs to harmonize and improve on several key elements and practices.

Cross-functional communication. DataOps starts with the same core paradigm as Agile development practices, which support improved collaboration across business, development, quality assurance and operations teams, and extends this collaboration to data engineers, data scientists and business analysts.

Agile mindset. It's essential to find ways to break various data processes into small chunks that can be adapted incrementally -- analogous to continuous development and continuous integration pipelines.

Integrated data pipeline. Enterprises need to automate the common handoffs between data processes such as ingestion, ETL/ELT, data quality, metadata management, storage, data preparation, feature engineering and deployment.
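A minimal sketch of automated handoffs, assuming each data process can be written as a function that takes rows and returns rows. The stage names and sample data are hypothetical; a real deployment would typically use an orchestration tool rather than a loop like this.

```python
def ingest(_rows):
    """Hypothetical ingestion step: returns raw rows from a source."""
    return [{"name": " Ada ", "score": "91"}, {"name": "Lin", "score": None}]


def quality_check(rows):
    """Drop rows that are missing a score."""
    return [row for row in rows if row["score"] is not None]


def transform(rows):
    """Trim names and cast scores to integers."""
    return [{"name": row["name"].strip(), "score": int(row["score"])}
            for row in rows]


def run_pipeline(stages, rows=None):
    """Pass the output of each stage to the next and record row counts."""
    history = []
    for stage in stages:
        rows = stage(rows)
        history.append((stage.__name__, len(rows)))
    return rows, history


result, history = run_pipeline([ingest, quality_check, transform])
print(result)   # [{'name': 'Ada', 'score': 91}]
print(history)  # [('ingest', 2), ('quality_check', 1), ('transform', 1)]
```

Recording the row count after every stage gives a simple per-stage measurement that can be monitored over time.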

Data-driven culture. Enterprises should adopt a long-term and ongoing program for cultivating data literacy across the organization and guiding data users who are finding new ways to incorporate data into different analytics tools.

Continuous feedback. Various teams also need to develop a process to aggregate insights for transforming and vetting data to help data engineering teams prioritize infrastructure improvements.

DataOps tools and vendors

DataOps tools address many capabilities required to ingest, transform, clean, orchestrate and load data. In some cases, these tools emerged to complement the vendor's other tools. In other cases, vendors focus on specific DataOps workflows. Popular DataOps tools include the following:

- Ascend.io helps ingest, transform and orchestrate data engineering and analytics workloads.

- Atlan provides collaboration and orchestration capabilities to automate DataOps workloads.

- Composable Analytics' platform includes tools for creating composable data pipelines.

- DataKitchen's platform provides DataOps observability and automation software.

- Delphix's platform masks and secures data using data virtualization.

- Devo's platform automates data onboarding and governance.

- Informatica has extended its data catalog tools to support DataOps capabilities.

- Infoworks software helps migrate data, metadata and workloads to the cloud.

- Kinaesis tools analyze, optimize and govern data infrastructure.

- Landoop/Lenses helps build data pipelines on Kubernetes.

- Nexla software provides data engineering automation to create and manage data products.

- Okera's platform helps provision, secure and govern sensitive data at scale.

- Qlik-Attunity's integration platform complements Qlik's visualization and analytics tools.

- Qubole's platform centralizes DataOps on top of a secure data lake for machine learning, AI and analytics.

- Software AG StreamSets helps create and manage data pipelines in cloud-based environments.

- Tamr has extended its data catalog tools to help streamline data workflows.

DataOps trends and future outlook

Several trends are driving the future of DataOps, including integration, augmentation and observability.

Increased integration with other data disciplines. DataOps will increasingly need to interoperate and support related data management practices. Gartner has identified MLOps, ModelOps and PlatformOps as complementary approaches to manage specific ways of using data. MLOps is geared to machine learning development and versioning, and ModelOps focuses on model engineering, training, experimentation and monitoring. Gartner characterizes PlatformOps as a comprehensive AI orchestration platform that includes DataOps, MLOps, ModelOps and DevOps.

Augmented DataOps. AI is beginning to help manage and orchestrate the data infrastructure itself. Data catalogs are evolving into augmented data catalogs and analytics into augmented analytics infused with AI. Similar techniques will be gradually applied to all other aspects of the DataOps pipeline.

Data observability. For years, the DevOps community has widely used application performance management tools backed by observability infrastructure to help pinpoint and prioritize issues with applications. Vendors like Acceldata, Monte Carlo, Precisely, Soda and Unravel are developing comparable data observability tools focused on the data infrastructure itself. DataOps tool vendors will increasingly add the ability to consume data observability feeds, whether through in-house development, integration, partnerships or acquisitions, to help optimize DataOps pipelines.
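To make the idea of a data observability signal concrete, the sketch below checks data freshness, one commonly monitored signal. It is an illustrative assumption, not a description of how any of the vendors named above work; the dataset names, timestamps and 24-hour threshold are hypothetical.

```python
from datetime import datetime, timedelta, timezone


def stale_datasets(last_updated, max_age, now):
    """Return names of datasets whose latest update is older than max_age."""
    return sorted(name for name, updated in last_updated.items()
                  if now - updated > max_age)


# Fixed "current" time so the example is reproducible.
now = datetime(2024, 1, 2, 12, 0, tzinfo=timezone.utc)

# Hypothetical last-update timestamps reported by the data platform.
last_updated = {
    "orders": datetime(2024, 1, 2, 11, 30, tzinfo=timezone.utc),
    "customers": datetime(2023, 12, 31, 9, 0, tzinfo=timezone.utc),
}

stale = stale_datasets(last_updated, timedelta(hours=24), now)
print(stale)  # ['customers']
```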