What is machine learning? Guide, definition and examples

Machine learning is a branch of AI focused on building computer systems that learn from data. The breadth of ML techniques enables software applications to improve their performance over time.

ML algorithms are trained to find relationships and patterns in data. Using historical data as input, these algorithms can make predictions, classify information, cluster data points, reduce dimensionality and even generate new content. Examples of the latter, known as generative AI, include OpenAI's ChatGPT, Anthropic's Claude and GitHub Copilot.

Machine learning is widely applicable across many industries. For example, e-commerce, social media and news organizations use recommendation engines to suggest content based on a customer's past behavior. In self-driving cars, ML algorithms and computer vision play a critical role in safe road navigation. In healthcare, ML can aid in diagnosis and suggest treatment plans. Other common ML use cases include fraud detection, spam filtering, malware threat detection, predictive maintenance and business process automation.

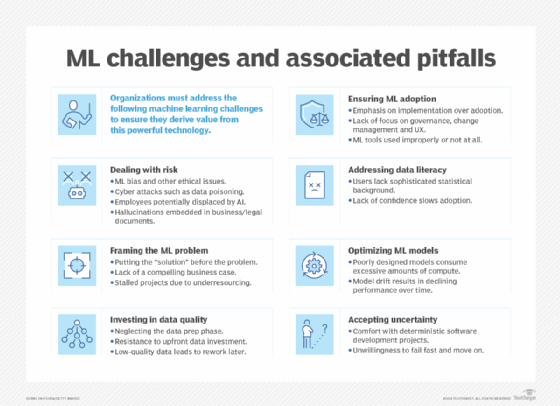

While ML is a powerful tool for solving problems, improving business operations and automating tasks, it's also complex and resource-intensive, requiring deep expertise and significant data and infrastructure. Choosing the right algorithm for a task calls for a strong grasp of mathematics and statistics. Training ML algorithms often demands large amounts of high-quality data to produce accurate results. The results themselves, particularly those from complex algorithms such as deep neural networks, can be difficult to understand. And ML models can be costly to run and fine-tune.

Still, most organizations are embracing machine learning, either directly or through ML-infused products. According to a 2024 report from Rackspace Technology, AI spending in 2024 is expected to more than double compared with 2023, and 86% of companies surveyed reported seeing gains from AI adoption. Companies reported using the technology to enhance customer experience (53%), innovate in product design (49%) and support human resources (47%), among other applications.

TechTarget's guide to machine learning serves as a primer on this important field, explaining what machine learning is, how to implement it and its business applications. You'll find information on the various types of ML algorithms, challenges and best practices associated with developing and deploying ML models, and what the future holds for machine learning. Throughout the guide, there are hyperlinks to related articles that cover these topics in greater depth.

Why is machine learning important?

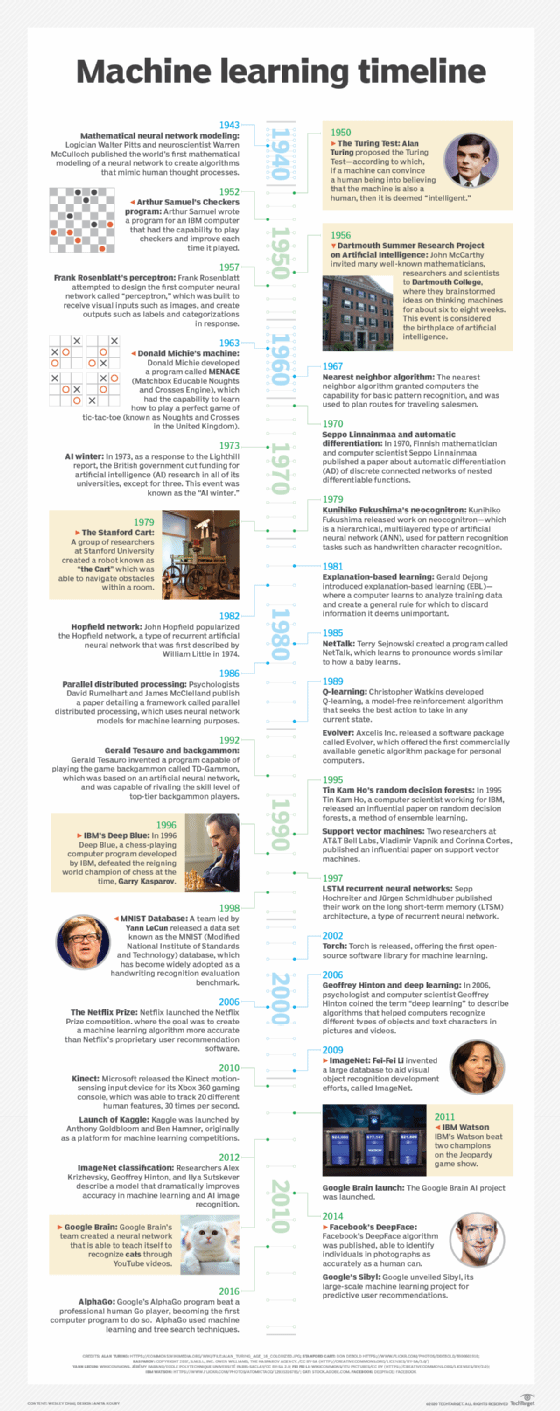

ML has played an increasingly important role in human society since its beginnings in the mid-20th century, when AI pioneers like Walter Pitts, Warren McCulloch, Alan Turing and John von Neumann laid the field's computational groundwork. Training machines to learn from data and improve over time has enabled organizations to automate routine tasks -- which, in theory, frees humans to pursue more creative and strategic work.

Machine learning has extensive and diverse practical applications. In finance, ML algorithms help banks detect fraudulent transactions by analyzing vast amounts of data in real time at a speed and accuracy humans cannot match. In healthcare, ML assists doctors in diagnosing diseases based on medical images and informs treatment plans with predictive models of patient outcomes. And in retail, many companies use ML to personalize shopping experiences, predict inventory needs and optimize supply chains.

ML also performs manual tasks that are beyond human ability to execute at scale -- for example, processing the huge quantities of data generated daily by digital devices. This ability to extract patterns and insights from vast data sets has become a competitive differentiator in fields like banking and scientific discovery. Many of today's leading companies, including Meta, Google and Uber, integrate ML into their operations to inform decision-making and improve efficiency.

Machine learning is necessary to make sense of the ever-growing volume of data generated by modern societies. The abundance of data humans create can also be used to further train and fine-tune ML models, accelerating advances in ML. This continuous learning loop underpins today's most advanced AI systems, with profound implications.

Philosophically, the prospect of machines processing vast amounts of data challenges humans' understanding of our intelligence and our role in interpreting and acting on complex information. Practically, it raises important ethical considerations about the decisions made by advanced ML models. Transparency and explainability in ML training and decision-making, as well as these models' effects on employment and societal structures, are areas for ongoing oversight and discussion.

What are the different types of machine learning?

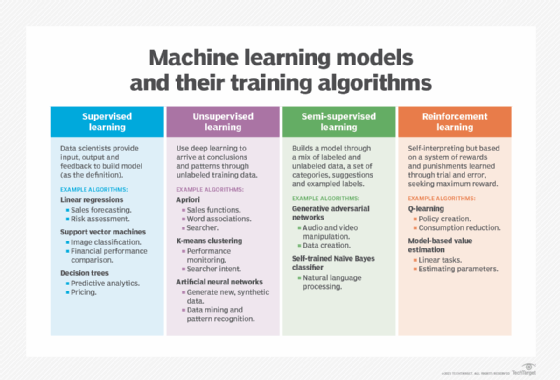

Classical ML is often categorized by how an algorithm learns to become more accurate in its predictions. The four basic types of ML are:

- supervised learning

- unsupervised learning

- semisupervised learning

- reinforcement learning.

The choice of algorithm depends on the nature of the data. Many algorithms and techniques aren't limited to a single type of ML; they can be adapted to multiple types depending on the problem and data set. For instance, deep learning algorithms such as convolutional and recurrent neural networks are used in supervised, unsupervised and reinforcement learning tasks, based on the specific problem and data availability.

Machine learning vs. deep learning neural networks

Deep learning is a subfield of ML that focuses on neural networks with many layers, known as deep neural networks. These models can automatically learn and extract hierarchical features from data, making them effective for tasks such as image and speech recognition.

How does supervised machine learning work?

Supervised learning supplies algorithms with labeled training data and defines which variables the algorithm should assess for correlations. Both the input and output of the algorithm are specified. Initially, most ML algorithms used supervised learning, but unsupervised approaches are gaining popularity.

Supervised learning algorithms are used for numerous tasks, including the following:

- Binary classification. This divides data into two categories.

- Multiclass classification. This chooses among more than two categories.

- Ensemble modeling. This combines the predictions of multiple ML models to produce a more accurate prediction.

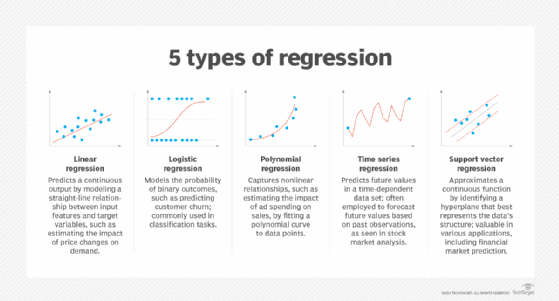

- Regression modeling. This predicts continuous values based on relationships within data.
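
To make the idea of labeled training data concrete, here is a minimal sketch of binary classification using a k-nearest neighbors vote. It is only one of many supervised techniques, and the data set, feature meanings and the `knn_predict` helper are hypothetical, chosen for illustration.

```python
import math
from collections import Counter

def knn_predict(train, query, k=3):
    """Predict a label for `query` by majority vote among its k nearest labeled points."""
    # `train` is a list of (features, label) pairs: both input and output are specified.
    by_distance = sorted(train, key=lambda pair: math.dist(pair[0], query))
    votes = Counter(label for _, label in by_distance[:k])
    return votes.most_common(1)[0][0]

# Hypothetical labeled data: (hours_studied, hours_slept) -> "pass" or "fail"
training_data = [
    ((1.0, 4.0), "fail"),
    ((2.0, 5.0), "fail"),
    ((1.5, 6.0), "fail"),
    ((6.0, 7.0), "pass"),
    ((7.0, 8.0), "pass"),
    ((8.0, 6.5), "pass"),
]

print(knn_predict(training_data, (6.5, 7.5)))  # pass
print(knn_predict(training_data, (1.2, 5.0)))  # fail
```

The same structure extends to multiclass classification by adding more label values; nothing in the vote assumes exactly two categories.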

How does unsupervised machine learning work?

Unsupervised learning doesn't require labeled data. Instead, these algorithms analyze unlabeled data to identify patterns and group data points into subsets using techniques such as clustering. Deep learning models, including many neural networks, can also be trained with unsupervised or self-supervised methods, although many others rely on labeled data.

Unsupervised learning is effective for various tasks, including the following:

- Splitting the data set into groups based on similarity using clustering algorithms.

- Identifying unusual data points in a data set using anomaly detection algorithms.

- Discovering sets of items in a data set that frequently occur together using association rule mining.

- Decreasing the number of variables in a data set using dimensionality reduction techniques.
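
As an illustration of the clustering task above, the following sketch implements k-means (Lloyd's algorithm) in plain Python. The points and starting centroids are made up, and a production system would more likely rely on a library implementation; this is a sketch, not a recommended implementation.

```python
import math

def kmeans(points, centroids, iterations=10):
    """Group unlabeled `points` around `centroids` using Lloyd's algorithm."""
    for _ in range(iterations):
        # Assignment step: attach each point to its nearest centroid.
        clusters = [[] for _ in centroids]
        for p in points:
            nearest = min(range(len(centroids)),
                          key=lambda i: math.dist(p, centroids[i]))
            clusters[nearest].append(p)
        # Update step: move each centroid to the mean of its cluster.
        new_centroids = []
        for old, cluster in zip(centroids, clusters):
            if cluster:
                new_centroids.append(
                    tuple(sum(axis) / len(cluster) for axis in zip(*cluster)))
            else:
                new_centroids.append(old)  # leave an empty cluster's centroid in place
        if new_centroids == centroids:
            break  # assignments have stabilized
        centroids = new_centroids
    return centroids, clusters

# Hypothetical unlabeled data with two visible groups
points = [(1.0, 1.0), (1.5, 2.0), (1.0, 1.5), (8.0, 8.0), (9.0, 8.5), (8.5, 9.0)]
final_centroids, final_clusters = kmeans(points, [points[0], points[3]])
print(final_clusters)
```

No labels are supplied anywhere; the grouping emerges from the distances between points alone.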

How does semisupervised learning work?

Semisupervised learning provides an algorithm with only a small amount of labeled training data. From this data, the algorithm learns the dimensions of the data set, which it can then apply to new, unlabeled data. Note, however, that providing too little training data can lead to overfitting, where the model simply memorizes the training data rather than truly learning the underlying patterns.

Although algorithms typically perform better when they train on labeled data sets, labeling can be time-consuming and expensive. Semisupervised learning combines elements of supervised learning and unsupervised learning, striking a balance between the former's superior performance and the latter's efficiency.

Semisupervised learning can be used in the following areas, among others:

- Machine translation. Algorithms can learn to translate language based on less than a full dictionary of words.

- Fraud detection. Algorithms can learn to identify cases of fraud with only a few positive examples.

- Labeling data. Algorithms trained on small data sets can learn to automatically apply data labels to larger sets.
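
The data-labeling use case can be sketched with self-training, one common semisupervised approach: fit a simple model on the few labeled points, adopt its confident predictions as pseudo-labels, and repeat. The nearest-centroid classifier, the `max_distance` confidence threshold and the data below are all assumptions made for this example.

```python
import math

def mean_point(points):
    """Coordinate-wise mean of a list of points."""
    return tuple(sum(axis) / len(points) for axis in zip(*points))

def nearest_label(features, centroids):
    """Return (label, distance) for the closest class centroid."""
    return min(
        ((label, math.dist(features, center)) for label, center in centroids.items()),
        key=lambda pair: pair[1],
    )

def self_train(labeled, unlabeled, rounds=3, max_distance=2.5):
    """Pseudo-label confident unlabeled points and fold them into the training set."""
    labeled = list(labeled)
    remaining = list(unlabeled)
    for _ in range(rounds):
        # Fit a nearest-centroid classifier on the current labeled set.
        by_class = {}
        for features, label in labeled:
            by_class.setdefault(label, []).append(features)
        centroids = {label: mean_point(pts) for label, pts in by_class.items()}
        still_unlabeled = []
        for features in remaining:
            label, distance = nearest_label(features, centroids)
            if distance <= max_distance:
                labeled.append((features, label))  # confident: adopt the pseudo-label
            else:
                still_unlabeled.append(features)   # not confident yet: try next round
        if len(still_unlabeled) == len(remaining):
            break  # no progress was made this round
        remaining = still_unlabeled
    return labeled, remaining

# Hypothetical data: two labeled seeds and four unlabeled points
seed_labels = [((1.0, 1.0), "a"), ((9.0, 9.0), "b")]
unlabeled_points = [(2.0, 1.5), (3.5, 2.5), (8.0, 8.5), (6.5, 7.5)]
final_labeled, still_unlabeled = self_train(seed_labels, unlabeled_points)
print(final_labeled)
```

In this run, two points are too far from the seeds to label in the first round, but they fall within reach once the nearer pseudo-labeled points have shifted the centroids. The same mechanism can also spread mistakes, which is why the confidence threshold matters.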

How does reinforcement learning work?

Reinforcement learning involves programming an algorithm with a distinct goal and a set of rules to follow in achieving that goal. The algorithm seeks positive rewards for performing actions that move it closer to its goal and avoids punishments for performing actions that move it further from the goal.

Reinforcement learning is often used for tasks such as the following:

- Helping robots learn to perform tasks in the physical world.

- Teaching bots to play video games.

- Helping enterprises plan allocation of resources.
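
The reward-and-punishment loop described above can be sketched with tabular Q-learning, one widely used reinforcement learning algorithm. The five-cell corridor environment, the reward values and the hyperparameters are hypothetical and chosen only to keep the example small.

```python
import random

N_STATES = 5            # corridor cells 0..4; the goal is the last cell
GOAL = N_STATES - 1
ACTIONS = (-1, +1)      # index 0 moves left, index 1 moves right

def step(state, action):
    """Apply an action and return (next_state, reward)."""
    next_state = min(max(state + action, 0), GOAL)
    # Positive reward for reaching the goal, small punishment for every other move.
    reward = 1.0 if next_state == GOAL else -0.01
    return next_state, reward

def train(episodes=200, alpha=0.5, gamma=0.9, epsilon=0.1, seed=0):
    """Learn a table of action values with epsilon-greedy Q-learning."""
    rng = random.Random(seed)
    q = [[0.0, 0.0] for _ in range(N_STATES)]
    for _ in range(episodes):
        state = 0
        for _ in range(100):  # cap the episode length
            if rng.random() < epsilon:
                a = rng.randrange(2)                          # explore
            else:
                a = max((0, 1), key=lambda i: q[state][i])    # exploit
            next_state, reward = step(state, ACTIONS[a])
            # The goal is terminal, so it has no future value.
            future = 0.0 if next_state == GOAL else max(q[next_state])
            q[state][a] += alpha * (reward + gamma * future - q[state][a])
            state = next_state
            if state == GOAL:
                break
    return q

q_table = train()
policy = ["left" if left > right else "right" for left, right in q_table[:GOAL]]
print(policy)
```

After training, the greedy policy read from the table heads toward the goal from every cell, even though the agent was never told which direction was correct.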

How to choose and build the right machine learning model

Developing the right ML model to solve a problem requires diligence, experimentation and creativity. Although the process can be complex, it can be summarized into a seven-step plan for building an ML model.

1. Understand the business problem and define success criteria. Convert the group's knowledge of the business problem and project objectives into a suitable ML problem definition. Consider why the project requires machine learning, the best type of algorithm for the problem, any requirements for transparency and bias reduction, and expected inputs and outputs.

2. Understand and identify data needs. Determine what data is necessary to build the model and assess its readiness for model ingestion. Consider how much data is needed, how it will be split into test and training sets, and whether a pretrained ML model can be used.

3. Collect and prepare the data for model training. Clean and label the data, including replacing incorrect or missing data, reducing noise and removing ambiguity. This stage can also include enhancing and augmenting data and anonymizing personal data, depending on the data set. Finally, split the data into training, test and validation sets.

4. Determine the model's features and train it. Start by selecting the appropriate algorithms and techniques, including setting hyperparameters. Next, train and validate the model, then optimize it as needed by adjusting hyperparameters and weights. Depending on the business problem, algorithms might include natural language understanding capabilities, such as recurrent neural networks or transformers for natural language processing (NLP) tasks, or boosting algorithms to optimize decision tree models.

5. Evaluate the model's performance and establish benchmarks. Perform confusion matrix calculations, determine business KPIs and ML metrics, measure model quality, and determine whether the model meets business goals.

6. Deploy the model and monitor its performance in production. This part of the process, known as operationalizing the model, is typically handled collaboratively by data scientists and machine learning engineers. Continuously measure model performance, develop benchmarks for future model iterations and iterate to improve overall performance. Deployment environments can be in the cloud, at the edge or on premises.

7. Continuously refine and adjust the model in production. Even after the ML model is in production and continuously monitored, the job continues. Changes in business needs, technology capabilities and real-world data can introduce new demands and requirements.
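
Two of the steps above lend themselves to a short sketch: splitting data into training, validation and test sets (step 3) and computing a confusion matrix with derived metrics (step 5). The split ratios, helper names and sample labels are assumptions for illustration, not a prescribed procedure.

```python
import random

def split_dataset(rows, train=0.7, validation=0.15, seed=42):
    """Shuffle rows and split them into training, validation and test sets."""
    shuffled = list(rows)
    random.Random(seed).shuffle(shuffled)
    n_train = round(len(shuffled) * train)
    n_val = round(len(shuffled) * validation)
    return (shuffled[:n_train],
            shuffled[n_train:n_train + n_val],
            shuffled[n_train + n_val:])   # whatever is left becomes the test set

def confusion_matrix(actual, predicted, positive=1):
    """Count true/false positives and negatives for a binary classifier."""
    counts = {"tp": 0, "fp": 0, "fn": 0, "tn": 0}
    for a, p in zip(actual, predicted):
        if p == positive:
            counts["tp" if a == positive else "fp"] += 1
        else:
            counts["fn" if a == positive else "tn"] += 1
    return counts

def precision_recall(cm):
    """Derive precision and recall, guarding against division by zero."""
    predicted_pos = cm["tp"] + cm["fp"]
    actual_pos = cm["tp"] + cm["fn"]
    precision = cm["tp"] / predicted_pos if predicted_pos else 0.0
    recall = cm["tp"] / actual_pos if actual_pos else 0.0
    return precision, recall

# Hypothetical data: 100 row IDs, plus eight labels and predictions
train_set, val_set, test_set = split_dataset(range(100))
actual = [1, 0, 1, 1, 0, 0, 1, 0]
predicted = [1, 0, 0, 1, 0, 1, 1, 0]
cm = confusion_matrix(actual, predicted)
print(len(train_set), len(val_set), len(test_set))  # 70 15 15
print(cm, precision_recall(cm))
```

Fixing the shuffle seed keeps the split reproducible, which makes later comparisons between model iterations fairer.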

Machine learning applications for enterprises

Machine learning has become integral to business software. The following are some examples of how various business applications use ML:

- Business intelligence. BI and predictive analytics software uses ML algorithms, including linear regression and logistic regression, to identify significant data points, patterns and anomalies in large data sets. These insights help businesses make data-driven decisions, forecast trends and optimize performance. Advances in generative AI have also enabled the creation of detailed reports and dashboards that summarize complex data in easily understandable formats.

- Customer relationship management. Key ML applications in CRM include analyzing customer data to segment customers, predicting behaviors such as churn, making personalized recommendations, adjusting pricing, optimizing email campaigns, providing chatbot support and detecting fraud. Generative AI can also create tailored marketing content, automate responses in customer service and generate insights based on customer feedback.

- Security and compliance. Support vector machines find the best line or boundary for dividing data into different groups, which lets them distinguish deviations in behavior from a normal baseline, a capability that is crucial for identifying potential cyberthreats. Generative adversarial networks can create adversarial examples of malware, helping security teams train ML models that are better at distinguishing between benign and malicious software.

- Human resource information systems. ML models streamline hiring by filtering applications and identifying the best candidates for a position. They can also predict employee turnover, suggest professional development paths and automate interview scheduling. Generative AI can help create job descriptions and generate personalized training materials.

- Supply chain management. Machine learning can optimize inventory levels, streamline logistics, improve supplier selection and proactively address supply chain disruptions. Predictive analytics can forecast demand more accurately, and AI-driven simulations can model different scenarios to improve resilience.

- Natural language processing. NLP applications include sentiment analysis, language translation and text summarization, among others. Advances in generative AI, such as OpenAI's GPT-4 and Google's Gemini, have significantly enhanced these capabilities. Generative NLP models can produce humanlike text, improve virtual assistants and enable more sophisticated language-based applications, including content creation and document summarization.

Machine learning examples by industry

Enterprise adoption of ML techniques across industries is transforming business processes. Here are a few examples:

- Financial services. Capital One uses ML to boost fraud detection, deliver personalized customer experiences and improve business planning. The company is using the MLOps methodology to deploy the ML applications at scale.

- Pharmaceuticals. Drug makers use ML for drug discovery, clinical trials and drug manufacturing. Eli Lilly has built AI and ML models, for example, to find the best sites for clinical trials and boost participant diversity. The models have sharply reduced clinical trial timelines, according to the company.

- Insurance. Progressive Corp.'s well-known Snapshot program uses ML algorithms to analyze driving data, offering lower rates to safe drivers. Other useful applications of ML in insurance include underwriting and claims processing.

- Retail. Walmart has deployed My Assistant, a generative AI tool, to help its roughly 50,000 campus employees with content generation and summarizing large documents, and to act as an overall "creative partner." The company is also using the tool to solicit employee feedback on use cases.

What are the advantages and disadvantages of machine learning?

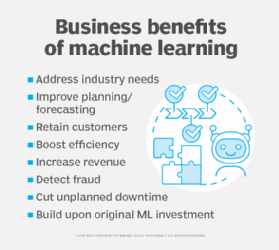

When deployed effectively, ML provides a competitive advantage to businesses by identifying trends and predicting outcomes, often with higher accuracy than conventional statistics or human intelligence. ML can benefit businesses in several ways:

- Analyzing historical data to retain customers.

- Launching recommender systems to grow revenue.

- Improving planning and forecasting.

- Assessing patterns to detect fraud.

- Boosting efficiency and cutting costs.

But machine learning also entails a number of business challenges. First and foremost, it can be expensive. ML requires costly software, hardware and data management infrastructure, and ML projects are typically driven by data scientists and engineers who command high salaries.

Another significant issue is ML bias. Algorithms trained on data sets that exclude certain populations or contain errors can lead to inaccurate models. These models can fail and, at worst, produce discriminatory outcomes. Basing core enterprise processes on biased models can cause businesses regulatory and reputational harm.
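
One simple way to surface this kind of bias is to compare a model's accuracy across groups. The sketch below assumes evaluation records carry a group attribute; the records, the group names and the `accuracy_by_group` helper are hypothetical, and accuracy gaps are only one of several fairness measures.

```python
from collections import defaultdict

def accuracy_by_group(records):
    """Return the share of correct predictions for each group."""
    counts = defaultdict(lambda: [0, 0])  # group -> [correct, total]
    for group, actual, predicted in records:
        counts[group][0] += int(actual == predicted)
        counts[group][1] += 1
    return {group: correct / total for group, (correct, total) in counts.items()}

# Hypothetical evaluation records: (group, actual_label, predicted_label)
records = [
    ("group_a", 1, 1), ("group_a", 0, 0), ("group_a", 1, 1), ("group_a", 0, 1),
    ("group_b", 1, 0), ("group_b", 0, 1), ("group_b", 1, 1), ("group_b", 0, 1),
]

scores = accuracy_by_group(records)
gap = max(scores.values()) - min(scores.values())
print(scores, gap)  # {'group_a': 0.75, 'group_b': 0.25} 0.5
```

A large gap does not prove discrimination on its own, but it is a signal that the training data or the model deserves a closer look before deployment.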

Importance of human-interpretable machine learning

Explaining the internal workings of a specific ML model can be challenging, especially when the model is complex. As machine learning evolves, the importance of explainable, transparent models will only grow, particularly in industries with heavy compliance burdens, such as banking and insurance.

Developing ML models whose outcomes are understandable and explainable by human beings has become a priority due to rapid advances in and adoption of sophisticated ML techniques, such as generative AI. Researchers at AI labs such as Anthropic have made progress in understanding how generative AI models work, drawing on interpretability and explainability techniques.

Interpretable vs. explainable AI

Interpretability focuses on understanding an ML model's inner workings in depth, whereas explainability involves describing the model's decision-making in an understandable way. Interpretable ML techniques are typically used by data scientists and other ML practitioners, whereas explainability is more often intended to help non-experts understand machine learning models.

A so-called black box model might still be explainable even if it is not interpretable. For example, researchers could test different inputs and observe the subsequent changes in outputs, using methods such as Shapley additive explanations (SHAP) to see which factors most influence the output. In this way, researchers can arrive at a clear picture of how the model makes decisions (explainability), even if they do not fully understand the mechanics of the complex neural network inside (interpretability).

Interpretable ML techniques aim to make a model's decision-making process clearer and more transparent. Examples include decision trees, which provide a visual representation of decision paths; linear regression, which explains predictions based on weighted sums of input features; and Bayesian networks, which represent dependencies among variables in a structured and interpretable way.

Explainable AI (XAI) techniques are used after the fact to make the output of more complex ML models more comprehensible to human observers. Examples include local interpretable model-agnostic explanations (LIME), which approximate the model's behavior locally with simpler models to explain individual predictions, and SHAP values, which assign importance scores to each feature to clarify how they contribute to the model's decision.
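
To show what assigning importance scores to features can look like, here is a sketch that computes exact Shapley values for a toy model by averaging each feature's marginal contribution over every feature ordering. It does not use the SHAP library, which relies on approximations to scale to real models; the `credit_score` function, its inputs and the all-zero baseline are hypothetical.

```python
from itertools import permutations

def shapley_values(model, instance, baseline):
    """Exact Shapley values relative to `baseline` (feasible only for a few features)."""
    features = list(instance)
    totals = {name: 0.0 for name in features}
    orderings = list(permutations(features))
    for ordering in orderings:
        current = dict(baseline)          # start with every feature at its baseline value
        previous_output = model(current)
        for name in ordering:
            current[name] = instance[name]            # switch one feature to its real value
            output = model(current)
            totals[name] += output - previous_output  # marginal contribution in this ordering
            previous_output = output
    return {name: total / len(orderings) for name, total in totals.items()}

def credit_score(x):
    """Hypothetical black box model with an interaction between its two inputs."""
    return 2.0 * x["income"] + 1.0 * x["tenure"] + 0.5 * x["income"] * x["tenure"]

instance = {"income": 4.0, "tenure": 2.0}
baseline = {"income": 0.0, "tenure": 0.0}
values = shapley_values(credit_score, instance, baseline)
print(values)  # {'income': 10.0, 'tenure': 4.0}
```

The scores sum to the difference between the model's output for the instance and for the baseline (14.0 here), and the interaction term is shared between the two features. The function only calls the model on different inputs, so it works without any knowledge of the model's internals.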

Transparency requirements can dictate ML model choice

In some industries, data scientists must use simple ML models because it's important for the business to explain how every decision was made. This need for transparency often results in a tradeoff between simplicity and accuracy. Although complex models can produce highly accurate predictions, explaining their outputs to a layperson -- or even an expert -- can be difficult.

Simpler, more interpretable models are often preferred in highly regulated industries where decisions must be justified and audited. But advances in interpretability and XAI techniques are making it increasingly feasible to deploy complex models while maintaining the transparency necessary for compliance and trust.

Machine learning teams, roles and workflows

Building an ML team starts with defining the goals and scope of the ML project. Essential questions to ask include: What business problems does the ML team need to solve? What are the team's objectives? What metrics will be used to assess performance?

Answering these questions is an essential part of planning a machine learning project. It helps the organization understand the project's focus (e.g., research, product development, data analysis) and the types of ML expertise required (e.g., computer vision, NLP, predictive modeling).

Next, based on these considerations and budget constraints, organizations must decide what job roles will be necessary for the ML team. The project budget should include not just standard HR costs, such as salaries, benefits and onboarding, but also ML tools, infrastructure and training. While the specific composition of an ML team will vary, most enterprise ML teams will include a mix of technical and business professionals, each contributing an area of expertise to the project.

ML team roles

An ML team typically includes some non-ML roles, such as domain experts who help interpret data and ensure relevance to the project's field, project managers who oversee the machine learning project lifecycle, product managers who plan the development of ML applications and software, and software engineers who build those applications.

In addition, several more narrowly ML-focused roles are essential for an ML team:

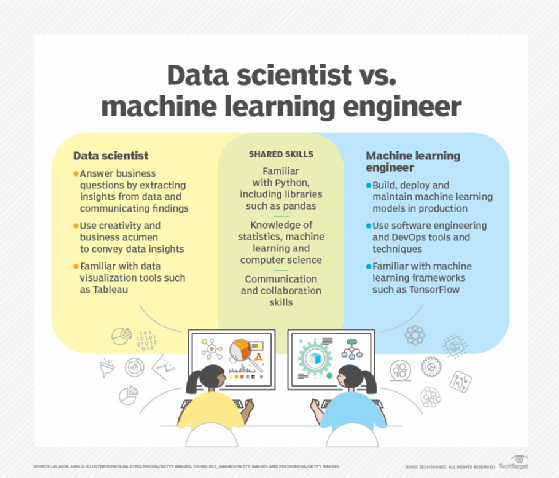

- Data scientist. Data scientists design experiments and build models to predict outcomes and identify patterns. They collect and analyze data sets, clean and preprocess data, design model architectures, interpret model outcomes and communicate findings to business leaders and stakeholders. Data scientists need expertise in statistics, computer programming and machine learning, including popular languages like Python and R and frameworks such as PyTorch and TensorFlow.

- Data engineer. Data engineers are responsible for the infrastructure supporting ML projects, ensuring that data is collected, processed and stored in an accessible way. They design, build and maintain data pipelines; manage large-scale data processing systems; and create and optimize data integration processes. They need expertise in database management, data warehousing, programming languages such as SQL and Scala, and big data technologies like Hadoop and Apache Spark.

- ML engineer. Also known as MLOps engineers, ML engineers help bring the models developed by data scientists into production environments by using the ML pipelines maintained by data engineers. They optimize algorithms for performance; deploy and monitor ML models; maintain and scale ML infrastructure; and automate the ML lifecycle through practices such as CI/CD and data versioning. In addition to knowledge of machine learning and AI, ML engineers typically need expertise in software engineering, data architecture and cloud computing.

Steps for establishing ML workflows

Once the ML team is formed, it's important that everything runs smoothly. Ensure that team members can easily share knowledge and resources to establish consistent workflows and best practices. For example, implement tools for collaboration, version control and project management, such as Git and Jira.

Clear and thorough documentation is also important for debugging, knowledge transfer and maintainability. For ML projects, this includes documenting data sets, model runs and code, with detailed descriptions of data sources, preprocessing steps, model architectures, hyperparameters and experiment results.

A common methodology for managing ML projects is MLOps, short for machine learning operations: a set of practices for deploying, monitoring and maintaining ML models in production. It draws inspiration from DevOps but accounts for the nuances that differentiate ML from software engineering. Just as DevOps improves collaboration between software developers and IT operations, MLOps connects data scientists and ML engineers with development and operations teams.

By adopting MLOps, organizations aim to improve consistency, reproducibility and collaboration in ML workflows. This involves tracking experiments, managing model versions and keeping detailed logs of data and model changes. Keeping records of model versions, data sources and parameter settings ensures that ML project teams can easily track changes and understand how different variables affect model performance.
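
The record keeping described above can be sketched as an append-only experiment log. This is a minimal illustration using only the Python standard library, not the interface of any particular MLOps tool; the file name, record fields, parameters and accuracy figures are all hypothetical.

```python
import hashlib
import json
import tempfile
from pathlib import Path

def data_fingerprint(raw_bytes):
    """Short, stable hash identifying the exact data a run was trained on."""
    return hashlib.sha256(raw_bytes).hexdigest()[:12]

def log_run(log_path, model_version, raw_bytes, params, metrics):
    """Append one experiment record to a JSON Lines log file."""
    record = {
        "model_version": model_version,
        "data_fingerprint": data_fingerprint(raw_bytes),
        "params": params,
        "metrics": metrics,
    }
    with open(log_path, "a", encoding="utf-8") as log_file:
        log_file.write(json.dumps(record, sort_keys=True) + "\n")
    return record

def load_runs(log_path):
    """Read every logged run back into a list of dictionaries."""
    with open(log_path, encoding="utf-8") as log_file:
        return [json.loads(line) for line in log_file if line.strip()]

def best_run(runs, metric):
    """Pick the run with the highest value for the given metric."""
    return max(runs, key=lambda run: run["metrics"][metric])

# Hypothetical runs: same data, different parameter settings
log_path = Path(tempfile.mkdtemp()) / "experiments.jsonl"
training_csv = b"age,income,churned\n34,52000,0\n51,38000,1\n"
log_run(log_path, "v1", training_csv, {"learning_rate": 0.1, "max_depth": 3}, {"accuracy": 0.81})
log_run(log_path, "v2", training_csv, {"learning_rate": 0.05, "max_depth": 5}, {"accuracy": 0.86})

runs = load_runs(log_path)
winner = best_run(runs, "accuracy")
print(winner["model_version"], winner["params"])
```

Because both runs share a data fingerprint, any difference in the metric can be attributed to the parameter settings rather than to a change in the data.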

Similarly, standardized workflows and automation of repetitive tasks reduce the time and effort involved in moving models from development to production. This includes automating model training, testing and deployment. After deployment, continuous monitoring and logging help ensure that models stay up to date with the latest data and continue to perform well.
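
As a rough illustration of post-deployment monitoring, the sketch below flags a feature whose average in production has moved away from what the model saw in training. This mean-shift check is a deliberately simple stand-in for the drift detection methods used in practice; the threshold of 2 standard deviations and the sample values are assumptions.

```python
import statistics

def drift_score(training_values, production_values):
    """Distance between production and training means, in training standard deviations."""
    mu = statistics.mean(training_values)
    sigma = statistics.stdev(training_values)
    return abs(statistics.mean(production_values) - mu) / sigma

def needs_review(training_values, production_values, threshold=2.0):
    """Flag the feature when its production mean drifts past the threshold."""
    return drift_score(training_values, production_values) > threshold

# Hypothetical values of one input feature
training = [10, 12, 11, 13, 9, 11, 10, 12]
stable_batch = [11, 10, 12, 11]
shifted_batch = [15, 16, 14, 15]

print(needs_review(training, stable_batch))   # False
print(needs_review(training, shifted_batch))  # True
```

A flagged feature is a prompt to investigate, and possibly to retrain, rather than proof that the model's predictions have degraded.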

Careers in machine learning and AI

The global AI market's value is expected to reach nearly $2 trillion by 2030, and the need for skilled AI professionals is growing in kind.

Machine learning tools and platforms

ML development relies on a range of platforms, software frameworks, code libraries and programming languages. Here's an overview of each category and some of the top tools in that category.

Platforms

ML platforms are integrated environments that provide tools and infrastructure to support the ML model lifecycle. Key functionalities include data management; model development, training, validation and deployment; and postdeployment monitoring and management. Many platforms also include features for improving collaboration, compliance and security, as well as automated machine learning (AutoML) components that automate tasks such as model selection and parameterization.

Each of the three major cloud providers offers an ML platform designed to integrate with its cloud ecosystem: Google Vertex AI, Amazon SageMaker and Microsoft Azure ML. These unified environments offer tools for model development, training and deployment, including AutoML and MLOps capabilities and support for popular frameworks such as TensorFlow and PyTorch. The choice often comes down to which platform integrates best with an organization's existing IT environment.

In addition to the cloud providers' offerings, there are several third-party and open source alternatives. The following are some other popular ML platforms:

- IBM Watson Studio. Offers comprehensive tools for data scientists, application developers and MLOps engineers. It emphasizes AI ethics and transparency and integrates well with IBM Cloud.

- Databricks. A unified analytics platform well suited for big data processing. It offers collaboration features, such as collaborative notebooks, and a managed version of MLflow, a Databricks-developed open source tool for managing the ML lifecycle.

- Snowflake. A cloud-based data platform offering data warehousing and support for ML and data science workloads. It integrates with a wide variety of data tools and ML frameworks.

- DataRobot. A platform for rapid model development, deployment and management that emphasizes AutoML and MLOps. It offers an extensive selection of prebuilt models and data preparation tools.

Frameworks and libraries

ML frameworks and libraries provide the building blocks for model development: collections of functions and algorithms that ML engineers can use to design, train and deploy ML models more quickly and efficiently.

In the real world, the terms framework and library are often used somewhat interchangeably. But strictly speaking, a framework is a comprehensive environment with high-level tools and resources for building and managing ML applications, whereas a library is a collection of reusable code for particular ML tasks.

The following are some of the most common ML frameworks and libraries:

- TensorFlow. An open source ML framework originally developed by Google. It is widely used for deep learning, as it offers extensive support for neural networks and large-scale ML.

- PyTorch. An open source ML framework originally developed by Meta. It is known for its flexibility and ease of use and, like TensorFlow, is popular for deep learning models.

- Keras. An open source Python library that acts as an interface for building and training neural networks. It is user-friendly and is often used as a high-level API for TensorFlow and other back ends.

- Scikit-learn. An open source Python library for data analysis and machine learning, also known as sklearn. It is ideal for tasks such as classification, regression and clustering.

- OpenCV. A computer vision library that supports Python, Java and C++. It provides tools for real-time computer vision applications, including image processing, video capture and analysis.

- NLTK. A Python library specialized for NLP tasks. Its features include text processing libraries for classification, tokenization, stemming, tagging and parsing, among others.

Programming languages

In theory, almost any programming language can be used for ML. But in practice, most programmers choose a language for an ML project based on considerations such as the availability of ML-focused code libraries, community support and versatility.

Much of the time, this means Python, the most widely used language in machine learning. Python is simple and readable, making it easy for coding newcomers or developers familiar with other languages to pick up. Python also boasts a wide range of data science and ML libraries and frameworks, including TensorFlow, PyTorch, Keras, scikit-learn, pandas and NumPy.

Other languages used in ML include the following:

- R. Known for its statistical analysis and visualization capabilities, R is widely used in academia and research. It is well suited for data manipulation, statistical modeling and graphical representation.

- Julia. Julia is a less well-known language designed specifically for numerical and scientific computing. It is known for its high performance, particularly when handling mathematical computations and large data sets.

- C++. C++ is an efficient and performant general-purpose language that is often used in production environments. It is valued for its speed and control over system resources, which make it well suited for performance-critical ML applications.

- Scala. A concise, general-purpose language, Scala is often used with big data frameworks such as Apache Spark. Scala combines object-oriented and functional programming paradigms, offering scalable and efficient data processing.

- Java. Like Scala, Java is a good fit for working with big data frameworks. It is a performant, portable and scalable general-purpose language that is commonly found in enterprise environments.

What is the future of machine learning?

Fueled by extensive research from companies, universities and governments around the globe, machine learning continues to evolve rapidly. Breakthroughs in AI and ML occur frequently, rendering accepted practices obsolete almost as soon as they're established. One certainty about the future of machine learning is its continued central role in the 21st century, transforming how work is done and the way we live.

Several emerging trends are shaping the future of ML:

- NLP. Advances in algorithms and infrastructure have led to more fluent conversational AI, more versatile ML models capable of adapting to new tasks and customized language models fine-tuned to business needs. Large language models are becoming more prominent, enabling sophisticated content creation and enhanced human-computer interactions.

- Computer vision. Evolving computer vision capabilities are expected to have a profound effect on many domains. In healthcare, computer vision plays an increasingly important role in diagnosis and monitoring. Environmental science benefits from computer vision models' ability to analyze and monitor wildlife and their habitats. In software engineering, computer vision is a core component of augmented and virtual reality technologies.

- Enterprise technology. Major vendors like Amazon, Google, Microsoft, IBM and OpenAI are racing to sign customers up for AutoML platform services that cover the spectrum of ML activities, including data collection, preparation and classification; model building and training; and application deployment.

- Interpretable ML and XAI. These concepts are gaining traction as organizations attempt to make their ML models more transparent and understandable. Techniques such as LIME, SHAP and interpretable model architectures are increasingly integrated into ML development to ensure that AI systems are not only accurate but also comprehensible and trustworthy.

Amid the enthusiasm, companies face challenges akin to those presented by previous cutting-edge, fast-evolving technologies. These challenges include adapting legacy infrastructure to accommodate ML systems, mitigating bias and other damaging outcomes, and optimizing the use of machine learning to generate profits while minimizing costs. Ethical considerations, data privacy and regulatory compliance are also critical issues that organizations must address as they integrate advanced AI and ML technologies into their operations.

Lev Craig covers AI and machine learning as the site editor for TechTarget Editorial's Enterprise AI site. Craig graduated from Harvard University with a bachelor's degree in English and has previously written about enterprise IT, software development and cybersecurity.

Linda Tucci is an executive industry editor at TechTarget Editorial. A technology writer for 20 years, she focuses on the CIO role, business transformation and AI technologies.

Ed Burns, former executive editor at TechTarget, also contributed to this article.