What is data management and why is it important?

Data management is the process of ingesting, storing, organizing and maintaining the data created and collected by an organization. Effective data management is a crucial piece of deploying the IT systems that run business applications and provide analytical information to help drive operational decision-making and strategic planning by corporate executives, business managers and other end users.

The data management process includes a combination of different functions that collectively aim to make sure the data in corporate systems is accurate, available and accessible. Most of the required work is done by IT and data management teams, but business users typically also participate in some parts of the process to ensure that the data meets their needs and to get them on board with policies governing its use.

This comprehensive guide to data management further explains what it is and provides insight on the individual disciplines it includes, best practices for managing data, challenges that organizations face and the business benefits of a successful data management strategy. You'll also find an overview of data management tools and techniques.

Importance of data management

Data increasingly is seen as a corporate asset that can be used to make better-informed business decisions, improve marketing campaigns, optimize business operations and reduce costs, all with the goal of increasing revenue and profits. But a lack of proper data management can saddle organizations with incompatible data silos, inconsistent data sets and data quality problems that limit their ability to run business intelligence (BI) and analytics applications -- or, worse, lead to faulty findings.

Data management has also grown in importance as businesses are subjected to an increasing number of regulatory compliance requirements, including data privacy and protection laws such as the EU's General Data Protection Regulation (GDPR) and the California Consumer Privacy Act (CCPA). In addition, companies are capturing ever-larger volumes of data and a wider variety of data types -- both hallmarks of the big data systems many have deployed. Without good data management, such environments can become unwieldy and hard to navigate.

Types of data management functions

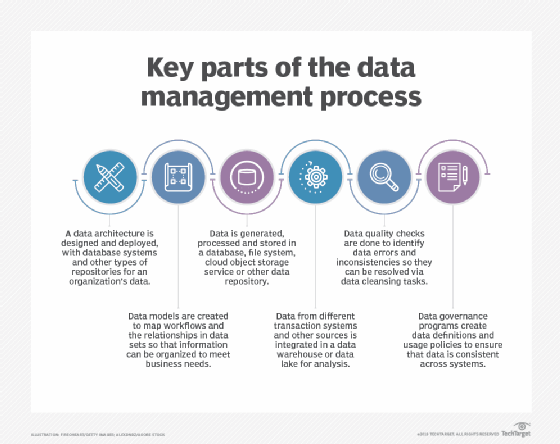

The separate disciplines that are part of the overall data management process cover a series of steps, from data processing and storage to governance of how data is formatted and used in operational and analytical systems. Developing a data architecture is often the first step, particularly in large organizations with lots of data to manage. A data architecture provides a blueprint for managing data and deploying databases and other data platforms, including specific technologies to fit individual applications.

Databases are the most common platform used to hold corporate data. They contain a collection of data that's organized so it can be accessed, updated and managed. They're used in both transaction processing systems that create operational data, such as customer records and sales orders, and data warehouses, which store consolidated data sets from business systems for BI and analytics.

That makes database administration a core data management function. Once databases have been set up, performance monitoring and tuning must be done to maintain acceptable response times on database queries that users run to get information from the data stored in them. Other administrative tasks include database design, configuration, installation and updates; data security; database backup and recovery; and application of software upgrades and security patches.
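As a rough illustration of query tuning, the following sketch uses Python's built-in sqlite3 module (chosen only because it needs no database server) to compare how the query planner runs the same query before and after an index is added. The table, column and index names are hypothetical, and the exact plan text differs between SQLite versions, so the commented outputs are examples rather than guarantees.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE orders (order_id INTEGER PRIMARY KEY, customer_id INTEGER, amount REAL)")
# Load some sample rows: 10,000 orders spread across 500 hypothetical customers.
conn.executemany(
    "INSERT INTO orders (customer_id, amount) VALUES (?, ?)",
    [(i % 500, i * 1.5) for i in range(10_000)],
)

query = "SELECT * FROM orders WHERE customer_id = ?"

def plan(sql):
    """Return the planner's description of how it intends to run the query."""
    return [row[-1] for row in conn.execute("EXPLAIN QUERY PLAN " + sql, (42,))]

before = plan(query)  # no index yet, so every row must be read
conn.execute("CREATE INDEX idx_orders_customer ON orders (customer_id)")
after = plan(query)   # the planner can now jump straight to matching rows

print(before)  # e.g. ['SCAN orders'], a full table scan
print(after)   # e.g. ['SEARCH orders USING INDEX idx_orders_customer (customer_id=?)']
```

Production DBMSs expose similar information through their own EXPLAIN facilities, and DBAs weigh the faster reads an index provides against the extra storage and slower writes it costs.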

The primary technology used to deploy and administer databases is a database management system (DBMS), which is software that acts as an interface between the databases it controls and the database administrators (DBAs), end users and applications that access them. Alternative data platforms to databases include file systems and cloud object storage services, which store data in less structured ways than mainstream databases do, offering more flexibility on the types of data that can be stored and how the data is formatted. As a result, though, they aren't a good fit for transactional applications.

Other fundamental data management disciplines include the following:

- data modeling, which diagrams the relationships between data elements and how data flows through systems;

- data integration, which combines data from different data sources for operational and analytical uses;

- data governance, which sets policies and procedures to ensure data is consistent throughout an organization;

- data quality management, which aims to fix data errors and inconsistencies; and

- master data management (MDM), which creates a common set of reference data on things like customers and products.

Data management tools and techniques

A wide range of technologies, tools and techniques can be employed as part of the data management process. The following options are available for different aspects of managing data.

Database management systems. The most prevalent type of DBMS is the relational database management system. Relational databases organize data into tables with rows and columns that contain database records. Related records in different tables can be connected through the use of primary and foreign keys, avoiding the need to create duplicate data entries. Relational databases are built around the SQL query language and a rigid data model best suited to structured transaction data. That and their support for the ACID transaction properties -- atomicity, consistency, isolation and durability -- have made them the top database choice for transaction processing applications.
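To make the key and transaction concepts concrete, here is a minimal sketch using Python's built-in sqlite3 module. The customers and orders tables are hypothetical; the point is that each customer's name is stored once and referenced by a foreign key, and that a transaction which violates that key is rolled back as a whole rather than partially applied.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute("PRAGMA foreign_keys = ON")  # SQLite only enforces foreign keys when this is enabled

conn.executescript("""
CREATE TABLE customers (
    customer_id INTEGER PRIMARY KEY,
    name        TEXT NOT NULL
);
CREATE TABLE orders (
    order_id    INTEGER PRIMARY KEY,
    customer_id INTEGER NOT NULL REFERENCES customers(customer_id),
    amount      REAL NOT NULL
);
""")

# The customer's name is stored once; orders point to it through the foreign key.
with conn:  # commits on success
    conn.execute("INSERT INTO customers VALUES (1, 'Acme Corp')")
    conn.execute("INSERT INTO orders VALUES (100, 1, 250.0)")

# Atomicity: both inserts are kept together, or neither is.
try:
    with conn:  # rolls back automatically if an exception is raised
        conn.execute("INSERT INTO orders VALUES (101, 1, 75.0)")
        conn.execute("INSERT INTO orders VALUES (102, 999, 10.0)")  # customer 999 doesn't exist
except sqlite3.IntegrityError as exc:
    print("rolled back:", exc)

# A join reassembles related records from both tables.
rows = conn.execute("""
    SELECT c.name, o.order_id, o.amount
    FROM orders o JOIN customers c ON c.customer_id = o.customer_id
    ORDER BY o.order_id
""").fetchall()
print(rows)  # [('Acme Corp', 100, 250.0)]; order 101 was rolled back along with 102
```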

However, other types of DBMS technologies have emerged as viable options for different kinds of data workloads. Most are categorized as NoSQL databases, which don't impose rigid requirements on data models and database schemas. As a result, they can store unstructured and semistructured data, such as sensor data, internet clickstream records and network, server and application logs.

There are four main types of NoSQL systems:

- document databases that store data elements in document-like structures;

- key-value databases that pair unique keys and associated values;

- wide-column stores with tables that can have a large and variable number of columns per row; and

- graph databases that connect related data elements in a graph format.
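The four NoSQL data models can be sketched with plain Python data structures. This only illustrates the shape of the data each model holds; it isn't how any particular NoSQL product stores, indexes or queries data, and all keys, fields and values are hypothetical.

```python
import json

# Document: self-describing, possibly nested records; documents in the same
# collection don't have to share the same fields.
orders = [
    {"_id": 1, "customer": "alice", "items": [{"sku": "A1", "qty": 2}]},
    {"_id": 2, "customer": "bob", "items": [], "coupon": "SPRING"},
]

# Key-value: an opaque value looked up by a unique key.
kv_store = {"session:42": "user=alice;cart=3"}

# Wide-column: rows keyed by a row key, each holding only the columns it uses.
sensor_readings = {
    "sensor-7": {"2024-01-01T00:00": 21.5, "2024-01-01T00:05": 21.7},
    "sensor-9": {"2024-01-01T00:00": 19.2},
}

# Graph: nodes plus edges that record how elements relate to one another.
graph = {"alice": ["bob"], "bob": ["carol"], "carol": []}

def reachable(start, edges):
    """Follow edges from a node: the kind of traversal graph databases optimize."""
    seen, stack = set(), [start]
    while stack:
        node = stack.pop()
        if node not in seen:
            seen.add(node)
            stack.extend(edges.get(node, []))
    return seen

print(json.dumps(orders[1]))
print(kv_store["session:42"])
print(len(sensor_readings["sensor-7"]))  # 2
print(sorted(reachable("alice", graph)))  # ['alice', 'bob', 'carol']
```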

The NoSQL name has become something of a misnomer, though -- while NoSQL databases don't rely on SQL, many now support elements of it and offer some level of ACID compliance.

Additional database and DBMS options include in-memory databases, which store data in a server's memory instead of on disk to accelerate I/O performance, and columnar databases, which are geared to analytics applications. Hierarchical databases that run on mainframes and predate the development of relational and NoSQL systems are also still available for use. Users can deploy databases in on-premises or cloud-based systems. In addition, various database vendors offer managed cloud database services, in which they handle database deployment, configuration and administration for users.

Big data management. NoSQL databases are often used in big data deployments because of their ability to store and manage various data types. Big data environments are also commonly built around open source technologies such as Hadoop, a distributed processing framework with a file system that runs across clusters of commodity servers; its associated HBase database; the Spark processing engine; and the Kafka, Flink and Storm stream processing platforms. Increasingly, big data systems are being deployed in the cloud, using object storage such as Amazon Simple Storage Service (S3).

Data warehouses and data lakes. The two most widely used repositories for managing analytics data are data warehouses and data lakes. A data warehouse -- the more traditional method -- typically is based on a relational or columnar database, and it stores structured data that has been pulled together from different operational systems and prepared for analysis. The primary data warehouse use cases are BI querying and enterprise reporting, which enable business analysts and executives to analyze sales, inventory levels and other KPIs.

An enterprise data warehouse includes data from business systems across an organization. In large companies, individual subsidiaries and business units with management autonomy may build their own data warehouses. Data marts are another warehousing option -- they're smaller versions of data warehouses that contain subsets of an organization's data for specific departments or groups of users. In one deployment approach, an existing data warehouse is used to create different data marts; in another, the data marts are built first and then used to populate a data warehouse.
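The first deployment approach, deriving a data mart from an existing warehouse, can be sketched in a few lines of SQL. This example runs on Python's built-in sqlite3 module; the sales_fact table, its columns and the apparel_mart name are hypothetical, and a real warehouse would use far richer schemas and scheduled refreshes.

```python
import sqlite3

conn = sqlite3.connect(":memory:")

# A tiny stand-in for an enterprise warehouse table holding all departments' sales.
conn.executescript("""
CREATE TABLE sales_fact (
    sale_id    INTEGER PRIMARY KEY,
    department TEXT,
    region     TEXT,
    amount     REAL
);
INSERT INTO sales_fact VALUES
    (1, 'apparel',     'east', 120.0),
    (2, 'electronics', 'east', 899.0),
    (3, 'apparel',     'west',  60.0);
""")

# Derive a department-specific data mart containing only the subset that group needs.
conn.execute("""
    CREATE TABLE apparel_mart AS
    SELECT region, SUM(amount) AS total_sales
    FROM sales_fact
    WHERE department = 'apparel'
    GROUP BY region
""")

mart = conn.execute("SELECT * FROM apparel_mart ORDER BY region").fetchall()
print(mart)  # [('east', 120.0), ('west', 60.0)]
```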

Data lakes, on the other hand, store pools of big data for use in predictive modeling, machine learning and other advanced analytics applications. Initially, they were most commonly built on Hadoop clusters, but S3 and other cloud object storage services are increasingly being used for data lake deployments. They're sometimes also deployed on NoSQL databases, and different platforms can be combined in a distributed data lake environment. The data may be processed for analysis when it's ingested, but a data lake often contains raw data stored as is. In that case, data scientists and other analysts typically do their own data preparation work for specific analytical uses.
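When a lake holds raw data stored as is, structure is applied only when an analyst reads it, an approach often called schema-on-read. The sketch below assumes the raw data is a set of JSON lines from hypothetical temperature sensors; the field names and filtering rules are illustrative choices for one particular analysis, not a standard.

```python
import json

# Raw events landed in the lake exactly as they arrived (hypothetical records).
raw_lines = [
    '{"device": "t-1", "temp_c": "21.4", "ts": "2024-05-01T10:00:00"}',
    '{"device": "t-2", "temp_c": null, "ts": "2024-05-01T10:00:00"}',
    'not valid json',
    '{"device": "t-1", "temp_c": "22.0", "ts": "2024-05-01T10:05:00", "fw": "1.3"}',
]

def prepare(lines):
    """Schema-on-read: parse, type and filter raw records only at analysis time."""
    rows = []
    for line in lines:
        try:
            record = json.loads(line)
        except json.JSONDecodeError:
            continue  # skip malformed input instead of failing the whole read
        if record.get("temp_c") is None:
            continue  # this particular analysis needs a temperature reading
        rows.append({
            "device": record["device"],
            "temp_c": float(record["temp_c"]),  # raw values arrived as strings
            "ts": record["ts"],
        })  # extra fields such as "fw" are simply ignored here
    return rows

prepared = prepare(raw_lines)
print(len(prepared))  # 2
```

A different analysis could apply different rules to the same raw lines, which is the flexibility a data lake trades for the up-front consistency of a warehouse.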

A third platform option for storing and processing analytical data has also emerged: the data lakehouse. As its name indicates, it combines elements of data lakes and data warehouses, merging the flexible data storage, scalability and lower cost of a data lake with the querying capabilities and more rigorous data management structure of a data warehouse.

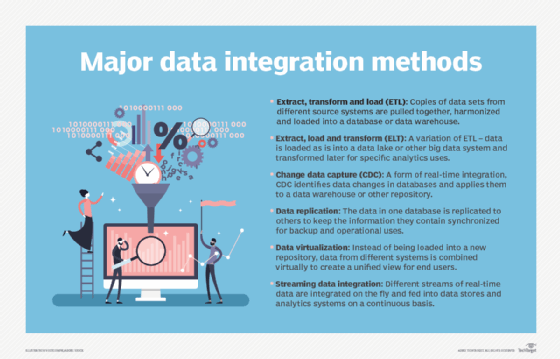

Data integration. The most widely used data integration technique is extract, transform and load (ETL), which pulls data from source systems, converts it into a consistent format and then loads the integrated data into a data warehouse or other target system. However, data integration platforms now also support a variety of other integration methods. That includes extract, load and transform (ELT), a variation on ETL that leaves data in its original form when it's loaded into the target platform and transforms it there as needed. ELT is a common choice for data integration in data lakes and other big data systems.
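The difference between the two comes down to where the transformation step runs. In this minimal sketch, using Python's built-in sqlite3 module as a stand-in target system with hypothetical customer data, ETL cleans the rows in the pipeline before loading them, while ELT loads the raw rows first and then transforms them with SQL inside the target, keeping the raw copy available.

```python
import sqlite3

# Hypothetical source rows with inconsistent formatting.
source_rows = [("  Alice ", "us"), ("BOB", "US"), ("carol", "de")]

def etl(conn):
    # Extract, then transform in the pipeline, then load only the cleaned result.
    cleaned = [(name.strip().title(), country.upper()) for name, country in source_rows]
    conn.execute("CREATE TABLE customers (name TEXT, country TEXT)")
    conn.executemany("INSERT INTO customers VALUES (?, ?)", cleaned)

def elt(conn):
    # Extract and load the raw data as is...
    conn.execute("CREATE TABLE raw_customers (name TEXT, country TEXT)")
    conn.executemany("INSERT INTO raw_customers VALUES (?, ?)", source_rows)
    # ...then transform inside the target system with SQL.
    conn.execute("""
        CREATE TABLE customers AS
        SELECT UPPER(SUBSTR(TRIM(name), 1, 1)) || LOWER(SUBSTR(TRIM(name), 2)) AS name,
               UPPER(country) AS country
        FROM raw_customers
    """)

etl_db, elt_db = sqlite3.connect(":memory:"), sqlite3.connect(":memory:")
etl(etl_db)
elt(elt_db)

query = "SELECT name, country FROM customers ORDER BY name"
print(etl_db.execute(query).fetchall())
# [('Alice', 'US'), ('Bob', 'US'), ('Carol', 'DE')]
print(elt_db.execute(query).fetchall() == etl_db.execute(query).fetchall())  # True
```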

ETL and ELT are batch integration processes that run at scheduled intervals. Data management teams can also do real-time data integration. One method is change data capture, which captures changes made to data in source databases and applies them to a data warehouse or other repository; another is streaming data integration, which integrates streams of real-time data on a continuous basis. Data virtualization is another integration option that uses an abstraction layer to create a virtual view of data from different systems for end users instead of physically loading the data into a data warehouse.
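Conceptually, change data capture produces an ordered stream of insert, update and delete events that a target replays to stay in sync with the source. The event format below is a hypothetical simplification, not the output of any specific CDC tool, and the target is a plain dictionary keyed by primary key rather than a real warehouse table.

```python
# Hypothetical change events in the order they were committed at the source.
change_log = [
    {"op": "insert", "key": 1, "row": {"name": "Alice", "tier": "silver"}},
    {"op": "insert", "key": 2, "row": {"name": "Bob", "tier": "gold"}},
    {"op": "update", "key": 1, "row": {"name": "Alice", "tier": "gold"}},
    {"op": "delete", "key": 2, "row": None},
]

def apply_changes(target, events):
    """Replay captured changes against a target keyed by primary key."""
    for event in events:
        if event["op"] in ("insert", "update"):
            target[event["key"]] = dict(event["row"])  # upsert the latest row image
        elif event["op"] == "delete":
            target.pop(event["key"], None)  # deleting a missing key is a no-op
        else:
            raise ValueError(f"unknown operation: {event['op']}")
    return target

replica = apply_changes({}, change_log)
print(replica)  # {1: {'name': 'Alice', 'tier': 'gold'}}
```

Because only the changes travel, rather than full table reloads, this style of integration can keep a repository close to current with far less data movement than a scheduled batch job.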

Data modeling. Data modelers create a series of conceptual, logical and physical data models that document data sets and workflows in a visual form and map them to business requirements for transaction processing and analytics. Common techniques for modeling data include the development of entity relationship diagrams, data mappings and schemas in a variety of model types. Data models often must be updated when new data sources are added or when an organization's information needs change.
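The step from a logical model to a physical one can be illustrated with a small sketch: entities and attributes are described as Python dataclasses, and a helper turns each entity into a CREATE TABLE statement. The Entity and Attribute classes and the to_ddl function are hypothetical helpers written for this example, not part of any modeling tool, and sqlite3 is used only to confirm that the generated schema is valid.

```python
import sqlite3
from dataclasses import dataclass, field
from typing import List, Optional

@dataclass
class Attribute:
    name: str
    sql_type: str
    primary_key: bool = False
    references: Optional[str] = None  # "table(column)" when the attribute is a foreign key

@dataclass
class Entity:
    name: str
    attributes: List[Attribute] = field(default_factory=list)

def to_ddl(entity: Entity) -> str:
    """Map one logical entity to a physical CREATE TABLE statement."""
    columns = []
    for attr in entity.attributes:
        column = f"{attr.name} {attr.sql_type}"
        if attr.primary_key:
            column += " PRIMARY KEY"
        if attr.references:
            column += f" REFERENCES {attr.references}"
        columns.append(column)
    return f"CREATE TABLE {entity.name} ({', '.join(columns)})"

# Logical model: a customer places many orders (a one-to-many relationship).
customer = Entity("customers", [
    Attribute("customer_id", "INTEGER", primary_key=True),
    Attribute("name", "TEXT"),
])
order = Entity("orders", [
    Attribute("order_id", "INTEGER", primary_key=True),
    Attribute("customer_id", "INTEGER", references="customers(customer_id)"),
    Attribute("amount", "REAL"),
])

conn = sqlite3.connect(":memory:")
for entity in (customer, order):
    ddl = to_ddl(entity)
    print(ddl)
    conn.execute(ddl)  # confirms the generated schema is valid SQL
```

When a new data source is added, updating the entity definitions and regenerating the schema keeps the documented model and the physical database in step.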

Data governance, data quality and MDM. Data governance is primarily an organizational process; software products that can help manage data governance programs are available, but they're an optional element. While governance programs may be managed by data management professionals, they usually include a data governance council made up of business executives who collectively make decisions on common data definitions and corporate standards for creating, formatting and using data.

Another key aspect of governance initiatives is data stewardship, which involves overseeing data sets and ensuring that end users comply with the approved data policies. Data steward can be either a full- or part-time position, depending on the size of an organization and the scope of its governance program. Data stewards can come from either business operations or the IT department; either way, a close knowledge of the data they oversee is normally a prerequisite.

Data governance is closely associated with data quality improvement efforts. Ensuring that data quality levels are high is a key part of effective data governance, and metrics that document improvements in the quality of an organization's data are central to demonstrating the business value of governance programs. Key data quality techniques supported by various software tools include the following:

- data profiling, which scans data sets to identify outlier values that might be errors;

- data cleansing, also known as data scrubbing, which fixes data errors by modifying or deleting bad data; and

- data validation, which checks data against preset quality rules.
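The three techniques can be combined in a short pipeline. This is a minimal sketch on hypothetical customer records: profiling flags outliers with the common interquartile-range rule, cleansing standardizes email formatting, and validation checks each record against two illustrative rules. The rules and thresholds are assumptions chosen for the example, not a standard.

```python
import re
import statistics

# Hypothetical customer records with typical quality problems.
records = [
    {"id": 1, "email": "ann@example.com", "age": 34},
    {"id": 2, "email": "BOB@EXAMPLE.COM ", "age": 29},
    {"id": 3, "email": "not-an-email", "age": 41},
    {"id": 4, "email": "dee@example.com", "age": 420},
    {"id": 5, "email": "eve@example.com", "age": 38},
]

def profile_outliers(values, k=1.5):
    """Profiling: flag values outside the interquartile-range fences."""
    q1, _, q3 = statistics.quantiles(values, n=4, method="inclusive")
    iqr = q3 - q1
    low, high = q1 - k * iqr, q3 + k * iqr
    return [v for v in values if v < low or v > high]

EMAIL_RULE = re.compile(r"^[^@\s]+@[^@\s]+\.[^@\s]+$")  # deliberately simple format check

def cleanse(record):
    """Cleansing: standardize formatting without changing the underlying value."""
    fixed = dict(record)
    fixed["email"] = record["email"].strip().lower()
    return fixed

def validate(record):
    """Validation: return the names of any preset rules the record breaks."""
    failures = []
    if not EMAIL_RULE.match(record["email"]):
        failures.append("email format")
    if not 0 <= record["age"] <= 120:
        failures.append("age range")
    return failures

print(profile_outliers([r["age"] for r in records]))  # [420]
cleaned = [cleanse(r) for r in records]
for r in cleaned:
    problems = validate(r)
    if problems:
        print(r["id"], problems)
# 3 ['email format']
# 4 ['age range']
```

Note that cleansing fixed record 2 automatically, while records 3 and 4 need a decision (correct, delete or flag) that a data steward or business user may have to make.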

Master data management is also affiliated with data governance and data quality management, although MDM hasn't been adopted as widely as they have. That's partly due to the complexity of MDM programs, which mostly limits them to large organizations. MDM creates a central registry of master data for selected data domains, consolidating the records for each customer, product or other entity into a single trusted version that's often called a golden record. The master data is stored in an MDM hub, which feeds the data to analytical systems for consistent enterprise reporting and analysis. If desired, the hub can also push updated master data back to source systems.
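A golden record is typically assembled from matched source records using survivorship rules that decide which value wins for each attribute. The sketch below assumes the matching step has already linked three hypothetical source records to one customer, and it applies a single illustrative rule: keep the most recently updated non-null value. Real MDM hubs support many rule types, such as source priority or data quality scores.

```python
from datetime import date

# Hypothetical records for the same customer, already matched across three systems.
sources = [
    {"system": "crm", "name": "Jane Doe", "phone": None,
     "email": "jane@example.com", "updated": date(2023, 3, 1)},
    {"system": "billing", "name": "Jane A. Doe", "phone": "555-0100",
     "email": None, "updated": date(2024, 1, 15)},
    {"system": "support", "name": "J. Doe", "phone": "555-0199",
     "email": "jdoe@example.com", "updated": date(2022, 7, 9)},
]

ATTRIBUTES = ("name", "phone", "email")

def golden_record(records, customer_key):
    """Survivorship rule: per attribute, keep the most recently updated non-null value."""
    newest_first = sorted(records, key=lambda r: r["updated"], reverse=True)
    merged = {"customer_key": customer_key}
    for attr in ATTRIBUTES:
        merged[attr] = next((r[attr] for r in newest_first if r[attr] is not None), None)
    return merged

print(golden_record(sources, "C-1"))
# {'customer_key': 'C-1', 'name': 'Jane A. Doe', 'phone': '555-0100', 'email': 'jane@example.com'}
```

The email comes from the CRM record because the newer billing record has no email on file, which shows how a golden record can combine the best available values from several systems.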

Data observability is an emerging process that can augment data quality and data governance initiatives by providing a more complete picture of data health in an organization. Adapted from observability practices in IT systems, data observability monitors data pipelines and data sets, identifying issues that need to be addressed. Data observability tools can be used to automate monitoring, alerting and root cause analysis procedures and to plan and prioritize problem-resolution work.
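Data observability tools collect metrics such as freshness, volume and completeness automatically and alert on anomalies. The sketch below hand-codes three such checks for one table, assuming the metrics have already been gathered; the thresholds, field names and the daily_orders table are hypothetical, and commercial tools typically learn thresholds from historical patterns instead of using fixed values.

```python
from datetime import datetime, timedelta, timezone

def check_table_health(stats, now, max_age=timedelta(hours=6), min_rows=1000, max_null_rate=0.05):
    """Return alert messages for a table's freshness, volume and completeness."""
    alerts = []
    age = now - stats["last_loaded"]
    if age > max_age:
        alerts.append(f"freshness: last load {age} ago")
    if stats["row_count"] < min_rows:
        alerts.append(f"volume: only {stats['row_count']} rows loaded")
    null_rate = stats["null_customer_ids"] / max(stats["row_count"], 1)  # avoid dividing by zero
    if null_rate > max_null_rate:
        alerts.append(f"completeness: {null_rate:.1%} of customer_id values are null")
    return alerts

now = datetime(2024, 6, 1, 12, 0, tzinfo=timezone.utc)
daily_orders = {
    "last_loaded": datetime(2024, 6, 1, 2, 0, tzinfo=timezone.utc),
    "row_count": 850,
    "null_customer_ids": 85,
}
for alert in check_table_health(daily_orders, now):
    print(alert)
# freshness: last load 10:00:00 ago
# volume: only 850 rows loaded
# completeness: 10.0% of customer_id values are null
```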

Data management best practices

These are some best practices to help keep the data management process on the right track in an organization.

Make data governance and data quality top priorities. A strong data governance program is a critical component of effective data management strategies, especially in organizations with distributed data environments that include a diverse set of systems. A strong focus on data quality is also a must. In both cases, though, IT and data management teams can't go it alone. Business executives and users must be involved to make sure their data needs are met and data quality problems aren't perpetuated. The same applies to data modeling projects.

Be smart about deploying data management platforms. The multitude of databases and other data platforms that are available to use requires a careful approach when designing an architecture and evaluating and selecting technologies. IT and data managers must be sure the data management systems they implement are fit for the intended purpose and will deliver the data processing capabilities and analytics information required by an organization's business operations.

Be sure you can meet business and user needs, now and in the future. Data environments aren't static -- new data sources are added, existing data sets change and business needs for data evolve. To keep up, data management must be able to adapt to changing requirements. For example, data teams need to work closely with end users in building and updating data pipelines to ensure that they include all of the required data on an ongoing basis. A DataOps process might help -- it's a collaborative approach to developing data systems and pipelines that's derived from a combination of DevOps, Agile software development and lean manufacturing methodologies. DataOps brings together data managers and users to automate workflows, improve communication and accelerate data delivery.

DAMA International, the Data Governance Professionals Organization and other industry groups also offer best-practices guidance and educational resources on data management disciplines. For example, DAMA has published DAMA-DMBOK: Data Management Body of Knowledge, a reference book that attempts to define a standard view of data management functions and methods. Commonly referred to as the DMBOK, it was first published in 2009, and a second edition, the DMBOK2, was released in 2017.

Data management risks and challenges

Ever-increasing data volumes complicate the data management process, especially when a mix of structured, semistructured and unstructured data is involved. Also, if an organization doesn't have a well-designed data architecture, it can end up with siloed systems that are difficult to integrate and manage in a coordinated way. That makes it harder to ensure that data sets are accurate and consistent across all data platforms.

Even in better-planned environments, enabling data scientists and other analysts to find and access relevant data can be a challenge, especially when the data is spread across various databases and big data systems. To help make data more accessible, many data management teams are creating data catalogs that document what's available in systems and typically include business glossaries, metadata-driven data dictionaries and data lineage records.
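A data catalog is, at its core, a searchable set of metadata records. This minimal sketch models catalog entries with a description (glossary-style text), a column dictionary and upstream links for lineage. The CatalogEntry class, the data set names and the search and lineage helpers are all hypothetical illustrations rather than the data model of any catalog product.

```python
from dataclasses import dataclass, field
from typing import Dict, List

@dataclass
class CatalogEntry:
    name: str
    description: str          # business glossary-style definition
    columns: Dict[str, str]   # data dictionary: column name -> meaning
    upstream: List[str] = field(default_factory=list)  # lineage: direct sources

catalog = {e.name: e for e in [
    CatalogEntry("crm.customers", "Customer master records from the CRM system",
                 {"customer_id": "Unique customer identifier", "segment": "Marketing segment"}),
    CatalogEntry("erp.orders", "Sales orders from the ERP system",
                 {"order_id": "Unique order identifier", "amount": "Order value in USD"}),
    CatalogEntry("dw.sales_by_customer", "Total sales per customer per month",
                 {"customer_id": "Unique customer identifier", "total_sales": "Sum of order amounts"},
                 upstream=["crm.customers", "erp.orders"]),
    CatalogEntry("bi.churn_report", "Monthly churn report for executives",
                 {"churn_rate": "Share of customers lost during the month"},
                 upstream=["dw.sales_by_customer"]),
]}

def search(term, catalog):
    """Find data sets whose description or column definitions mention a term."""
    term = term.lower()
    return sorted(name for name, entry in catalog.items()
                  if term in entry.description.lower()
                  or any(term in text.lower() for text in entry.columns.values()))

def lineage(name, catalog):
    """Walk upstream links to list every data set the named one depends on."""
    sources, stack = [], list(catalog[name].upstream)
    while stack:
        current = stack.pop()
        if current not in sources:
            sources.append(current)
            stack.extend(catalog[current].upstream)
    return sorted(sources)

print(search("customer", catalog))
# ['bi.churn_report', 'crm.customers', 'dw.sales_by_customer']
print(lineage("bi.churn_report", catalog))
# ['crm.customers', 'dw.sales_by_customer', 'erp.orders']
```

Lineage answers questions such as which reports are affected if a source system changes, which is one reason catalogs pair it with glossaries and data dictionaries.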

The accelerating shift to the cloud can ease some aspects of data management work, but it also creates new challenges. For example, migrating to cloud databases can be complicated for organizations that need to move data and processing workloads from existing on-premises systems. Costs are another big issue in the cloud: The use of cloud systems and managed services must be monitored closely to make sure data processing bills don't exceed the budgeted amounts.

Many data management teams now share accountability for protecting corporate data and limiting potential legal liabilities for data breaches or misuse of data. Data managers need to help ensure compliance with both government and industry regulations on data security, privacy and usage.

That has become a more pressing concern with the passage of GDPR, the European Union's data privacy law that took effect in May 2018, and the CCPA, which was signed into law in 2018 and became effective at the start of 2020. The provisions of CCPA were later expanded by the California Privacy Rights Act, a ballot measure that was approved by the state's voters in November 2020 and took effect on Jan. 1, 2023.

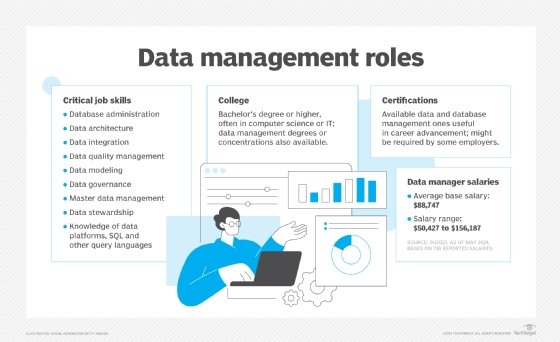

Data management tasks and roles

The data management process involves a wide range of tasks, duties and skills. In smaller organizations with limited resources, individual workers may handle multiple roles. But in larger ones, data management teams commonly include data architects, data modelers, DBAs, database developers, data administrators, data quality analysts and engineers, and ETL developers. Another role that's being seen more often is the data warehouse analyst, who helps manage the data in a data warehouse and builds analytical data models for business users.

Data scientists, other data analysts and data engineers, who help build data pipelines and prepare data for analysis, might also be part of a data management team. In other cases, they're on a separate data science or analytics team. Even then, though, they typically handle some data management tasks themselves, especially in data lakes with raw data that needs to be filtered and prepared for specific analytics uses.

Likewise, application developers sometimes help deploy and manage big data platforms, which generally require different skills than relational database systems do. As a result, organizations might need to hire new workers or retrain traditional DBAs to meet their big data management needs.

Data governance managers and data stewards qualify as data management professionals, too. But they're usually part of a separate data governance team.

Benefits of good data management

A well-executed data management strategy can benefit organizations in various ways:

- It can help companies gain potential competitive advantages over their business rivals, both by improving operational effectiveness and enabling better decision-making.

- Organizations with well-managed data can become more agile, making it possible to spot market trends and move to take advantage of new business opportunities more quickly.

- Effective data management can also help companies avoid data breaches, data collection missteps and other data security and privacy issues that could damage their reputation, add unexpected costs and put them in legal jeopardy.

- Ultimately, a solid approach to data management can provide better business performance by helping to improve business strategies and processes.

Data management history, evolution and trends

The first flowering of data management was largely driven by IT professionals who focused on solving the problem of garbage in, garbage out in the earliest computers after recognizing that the machines reached false conclusions because they were fed inaccurate or inadequate data. Mainframe-based hierarchical databases became available in the 1960s, bringing more formality to the burgeoning process of managing data.

The relational database emerged in the 1970s and cemented its place at the center of the data management ecosystem during the 1980s. The idea of the data warehouse was conceived late in that decade, and early adopters of the concept began deploying data warehouses in the mid-1990s. By the early 2000s, relational software was a dominant technology, with a virtual lock on database deployments.

But Hadoop was first released in 2006, and it was followed by the Spark processing engine and various other big data technologies. A range of NoSQL databases also started to become available in the same time frame. While relational platforms are still the most widely used data store by far, the rise of big data and NoSQL alternatives and the data lake environments they enable have given organizations a broader set of data management choices. The addition of the data lakehouse concept in 2017 further expanded the options.

But all of those choices have made many data environments more complex. That's spurring the development of new technologies and processes designed to help make them easier to manage. In addition to data observability, they include data fabric, an architectural framework that aims to better unify data assets by automating integration processes and making them reusable, and data mesh, a decentralized architecture that gives data ownership and management responsibilities to individual business domains, with federated governance to agree on organizational standards and policies.

None of those three approaches is widely used yet, though. In its 2022 Hype Cycle report on new data management technologies, consulting firm Gartner said each has been adopted by less than 5% of its target user audience. Gartner predicted that data fabric and data observability are both five to 10 years away from reaching full maturity and mainstream adoption, but it said they could ultimately be very beneficial to users. It was less bullish about data mesh, giving that a "Low" potential benefit rating.

The following are some other notable data management trends:

Cloud data management technologies are becoming pervasive. Gartner forecast that cloud databases would account for 50% of overall DBMS revenue in 2022. In the Hype Cycle report, it said organizations are also "moving rapidly" to deploy emerging data management technologies in the cloud. For companies that aren't ready to fully migrate, hybrid cloud architectures that combine cloud and on-premises systems -- for example, hybrid data warehouse environments -- are also an option.

Augmented data management capabilities also aim to help streamline processes. Software vendors are adding augmented functionality for data quality, database management, data integration and data cataloging that uses AI and machine learning technologies to automate repetitive tasks, identify issues and suggest actions.

The growth of edge computing is creating new data management needs. As organizations increasingly use remote sensors and IoT devices to collect and process data as part of edge computing environments, some vendors are also developing edge data management capabilities for endpoint devices.