consumer privacy (customer privacy)

What is consumer privacy (customer privacy)?

Consumer privacy, also known as customer privacy, involves the handling and protection of the sensitive personal information provided by customers in the course of everyday transactions. The internet has evolved into a medium of commerce, making consumer data privacy a growing concern.

This form of information privacy concerns how businesses collect, use and protect consumers' personal data. Businesses implement consumer privacy standards to comply with local laws and to increase consumer trust, as many consumers care about the privacy of their personal information.

Consumer privacy issues

Personal information that is misused or inadequately protected can result in identity theft, financial fraud and other crimes that collectively cost people, businesses and governments billions of dollars each year.

Common consumer privacy features offered by corporations and government agencies include the following:

- Do-not-call lists.

- Verification of transactions by email or telephone.

- Email security technologies, such as email encryption.

- Passwords and multifactor authentication.

- Encryption and decryption of electronically transmitted data.

- Opt-out provisions in user agreements for bank accounts, utilities, credit cards and other similar services.

- Digital signatures.

- Biometric identification technology.
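Of the features listed above, multifactor authentication is the easiest to show in code. The sketch below is minimal and assumes the time-based one-time password (TOTP) scheme from RFC 6238, which many authenticator apps use. It also assumes HMAC-SHA1, 30-second time steps and a secret already shared between the service and the user's app. TOTP is only one MFA method among several, and the function names are hypothetical.

```python
import hashlib
import hmac
import struct
import time
from typing import Optional


def hotp(secret: bytes, counter: int, digits: int = 6) -> str:
    """HMAC-based one-time password (RFC 4226) using HMAC-SHA1."""
    # The counter is encoded as an 8-byte big-endian unsigned integer.
    mac = hmac.new(secret, struct.pack(">Q", counter), hashlib.sha1).digest()
    # Dynamic truncation: the low 4 bits of the last byte pick an offset,
    # and 31 bits are read from the 4 bytes starting at that offset.
    offset = mac[-1] & 0x0F
    code = struct.unpack(">I", mac[offset:offset + 4])[0] & 0x7FFFFFFF
    return str(code % 10 ** digits).zfill(digits)


def totp(secret: bytes, for_time: Optional[float] = None,
         step: int = 30, digits: int = 6) -> str:
    """Time-based one-time password (RFC 6238): HOTP over the current time step."""
    if for_time is None:
        for_time = time.time()
    return hotp(secret, int(for_time // step), digits)


def verify_totp(secret: bytes, submitted: str, for_time: Optional[float] = None,
                step: int = 30, window: int = 1) -> bool:
    """Accept a code from the current step or +/- `window` steps (clock drift)."""
    if for_time is None:
        for_time = time.time()
    for drift in range(-window, window + 1):
        t = for_time + drift * step
        if t < 0:
            continue  # no time steps exist before the Unix epoch
        expected = totp(secret, t, step, len(submitted))
        # Constant-time comparison avoids leaking how many digits matched.
        if hmac.compare_digest(expected, submitted):
            return True
    return False


if __name__ == "__main__":
    secret = b"12345678901234567890"  # RFC test secret; real secrets are random per user
    print(totp(secret, for_time=59, digits=8))  # 94287082 (RFC 6238 test vector)
```

The small verification window is a common usability trade-off. A wider window tolerates worse clock drift, but it also gives an attacker more valid codes to guess.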

The rise of e-commerce and big data in the early 2000s cast consumer data privacy issues in a new light. The World Wide Web Consortium's Platform for Privacy Preferences Project (P3P) emerged to give websites a standard, machine-readable way to declare their data practices, so that browsers could automatically compare those practices with users' privacy preferences. However, P3P was implemented on only a small number of platforms, and the widespread gathering of web activity data remained largely unregulated.

Since then, data has become far more valuable to corporations, partly because more data enables more precise online tracking, behavioral profiling and data-driven targeted marketing. As a result, almost any interaction with a large corporation, no matter how passive, results in the collection of consumer data.

The surplus of valuable data, combined with minimal regulation, increases the chance that sensitive information could be misused or mishandled.

For example, Meta collects a large amount of personal data from Facebook users. This includes how much time they spend on the app, checked-in locations, metadata about posted content, Messenger contacts and items bought through Marketplace. Meta can share this data with third-party apps, advertisers and other Meta companies, and it uses the data for targeted advertising. Data that isn't properly protected can be misused, as the Facebook-Cambridge Analytica scandal showed when it came to light in 2018, before Facebook's parent company was renamed Meta. The personal data of up to 87 million Facebook users was harvested through a third-party quiz app and passed to Cambridge Analytica, which used it to build voter profiles for political campaigns.

Laws that protect consumer privacy

Consumer privacy is derived from the idea of personal privacy. Although personal privacy isn't explicitly outlined in the U.S. Constitution, several legal decisions have recognized it as an essential right. The Ninth Amendment is often cited to support a broad reading of the Bill of Rights, one that protects personal privacy in ways that are implied rather than spelled out.

Despite this, there's currently no comprehensive legal standard for data privacy at the federal level in the U.S. There have been attempts at creating one, but none have been successful.

For example, in 2017, Congress repealed Federal Communications Commission rules that would have required internet service providers to obtain their customers' consent before using their personal data for advertising and marketing. Another comprehensive federal consumer privacy bill, the Consumer Online Privacy Rights Act, was introduced in late 2019. The bill has not passed, and many expect that getting it approved will be a struggle.

Currently, the U.S. relies on a combination of state and federal laws enforced by various government bodies, such as the Federal Trade Commission (FTC) and state attorneys general. Because no central authority enforces these laws, the result can be inconsistencies and loopholes in U.S. privacy law.

By contrast, legislation in Europe enforces high standards of data privacy protection. For example, the European Union (EU) adopted the General Data Protection Regulation (GDPR) in 2016, and it took effect in May 2018. The GDPR unified data privacy laws across the EU and updated existing laws to better reflect modern data collection and exchange practices.

The law also had a significant effect on nations outside of Europe, including the U.S., because multinational corporations that serve people in the EU had to rewrite their privacy policies to comply with the new regulation. Companies that don't comply can incur large financial penalties. An early high-profile example is Google. In 2019, France's data protection authority, CNIL, fined Google 50 million euros (about $57 million) under the GDPR for failing to meet transparency and consent requirements in the account setup process for Android phones.

Many tout the GDPR as the first legislation of its kind, and it has influenced other nations, as well as states within the U.S., to adopt similar regulations. The GDPR is workable across the EU largely because each member state has a national data protection authority to enforce it.

While the U.S. doesn't have a unified data privacy framework, it does have a collection of laws that address data security and consumer privacy in various sectors of industry. Federal laws that are relevant to consumer privacy regulations and data privacy in the U.S. include the following:

- The Privacy Act of 1974 governs the collection and use of information about individuals in federal agencies' systems. It prohibits the disclosure of an individual's records without their written consent, unless the information is shared under one of 12 statutory exceptions.

- The Health Insurance Portability and Accountability Act of 1996 (HIPAA) outlines how protected health information used in the healthcare industry must be handled.

- The Fair Credit Reporting Act of 1970 regulates how consumer reporting agencies collect, share and use the information in consumers' credit reports, which provide insight into an individual's financial status.

- The Children's Online Privacy Protection Act of 1998 requires operators of websites and online services directed at children under the age of 13 to obtain verifiable parental consent before collecting personal information from those children.

- The Financial Services Modernization Act of 1999, also known as the Gramm-Leach-Bliley Act, governs how companies that provide financial products and services collect and share client information. It also prohibits obtaining customers' sensitive information under false pretenses. The act distinguishes between consumers, who obtain a financial product or service for personal use, and customers, who have an ongoing relationship with the institution. Customers must always be notified of privacy practices, whereas consumers must be notified only under certain conditions.

- The Family Educational Rights and Privacy Act (FERPA) of 1974 protects the privacy of student education records and applies to all schools that receive funding from the U.S. Department of Education.

Many of these federal laws provide reasonable privacy protections, but they are widely considered to be limited in scope and out of date. At the state level, however, several important data privacy laws have recently passed, with more pending. Because these laws are recent, they are better suited to current data exchange practices.

The most notable of these state laws is the California Consumer Privacy Act (CCPA), which was signed in 2018 and took effect on Jan. 1, 2020. The law introduced rights that previously hadn't been outlined in any U.S. law, and a business must honor them when it receives a verifiable consumer request. The law entitles consumers to do the following:

- Know what personal data about them is being collected.

- Know if their personal data is being sold and to whom.

- Opt out of the sale of their personal information.

- Access their collected personal data.

- Request deletion of personal data collected about them.

- Not be penalized or charged for exercising their rights under the CCPA.

- Not have their personal data sold while they're under 16 unless affirmative, opt-in consent is given. For children under 13, that consent must come from a parent or guardian; for children at least 13 but younger than 16, it can come from the child.

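The law applies to for-profit businesses that do business in California and meet at least one of the following thresholds: annual gross revenue of more than $25 million; buying, selling or sharing the personal information of 100,000 or more California consumers or households; or deriving 50% or more of annual revenue from selling or sharing consumers' personal information. Companies that don't comply face sizable penalties and fines.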
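The applicability test is an "any one threshold" check. The following is a deliberately simplified Python sketch of that check, with hypothetical names. It encodes three thresholds: revenue, the number of California consumers or households, and the share of revenue from selling or sharing personal information. It ignores other statutory conditions and definitions, so the constants are illustrative only and should be checked against the current statute.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class BusinessProfile:
    """Hypothetical summary of a for-profit business doing business in California."""
    annual_gross_revenue_usd: float
    # California consumers or households whose personal information the
    # business buys, sells or shares in a year.
    ca_consumers_or_households: int
    # Fraction (0.0 to 1.0) of annual revenue from selling or sharing personal information.
    data_sales_revenue_share: float


REVENUE_THRESHOLD_USD = 25_000_000  # must be exceeded
CONSUMER_THRESHOLD = 100_000        # 100,000 or more
DATA_SALES_SHARE_THRESHOLD = 0.50   # 50% or more


def ccpa_thresholds_met(b: BusinessProfile) -> List[str]:
    """Return the name of every threshold the business meets."""
    met = []
    if b.annual_gross_revenue_usd > REVENUE_THRESHOLD_USD:
        met.append("revenue")
    if b.ca_consumers_or_households >= CONSUMER_THRESHOLD:
        met.append("consumer_count")
    if b.data_sales_revenue_share >= DATA_SALES_SHARE_THRESHOLD:
        met.append("data_sales_share")
    return met


def ccpa_likely_applies(b: BusinessProfile) -> bool:
    # Meeting any single threshold is enough.
    return len(ccpa_thresholds_met(b)) > 0


if __name__ == "__main__":
    startup = BusinessProfile(5_000_000, 120_000, 0.0)
    print(ccpa_thresholds_met(startup))  # ['consumer_count']
    print(ccpa_likely_applies(startup))  # True
```

Returning the list of met thresholds, instead of only a Boolean, makes the reason for a result visible. For example, a small company can still be covered because of how many consumers' data it handles.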

Currently, the law applies only to California residents. However, it's expected to set a precedent for other states to take similar action. Several companies have also promised to honor CCPA rights for consumers in all 50 states rather than maintain a separate privacy policy for Californians. Participating businesses include Microsoft, Netflix, Starbucks and UPS.

The following states have enacted or are considering similar laws:

- Vermont. In 2018, the state approved a law that requires data brokers to register with the state each year and disclose details of their data practices, including whether and how consumers can opt out.

- Nevada. In 2019, the state enacted a law allowing consumers to refuse the sale of their data.

- Maine. The state has enacted legislation that prohibits broadband internet service providers from using, disclosing, selling or allowing access to customer data without explicit consent.

- New York. The New York State Senate passed the New York Privacy Act on June 9, 2023, after its third reading. To become law, the bill would also need to pass the State Assembly and be signed by the governor. The act is modeled after the CCPA and aims to go further.

Critics of these laws worry that they will still fall short and create loopholes that data brokers could exploit. Additional compliance requirements also force corporations to adapt, which creates more work and potential bottlenecks and could hinder the development of valuable technology and services. A patchwork of differing state laws can also impose conflicting compliance requirements and create new problems for consumers and corporations alike. However, privacy advocates view these parallel state-level efforts as a step toward comprehensive federal legislation in the future.

Agencies that regulate data privacy

The following agencies regulate data privacy in the U.S.:

- The FTC uses its authority over unfair and deceptive business practices to hold companies accountable for the privacy promises they make to customers. It can take legal action against companies that violate their own privacy policies or fail to protect their customers' sensitive personal information. It also provides resources for those who want to learn more about privacy policies and best practices, as well as information for victims of privacy-related crimes, such as identity theft. The FTC is currently the federal agency most involved in regulating data privacy and defending consumers' privacy in the U.S.

- The Consumer Financial Protection Bureau protects consumers in the financial sector. It has outlined principles that protect consumers when authorizing a third party to access their financial data and regulates the provision of financial services and products using these principles.

- The U.S. Department of Education administers and enforces FERPA and helps schools and school districts adopt best practices for handling student information. Students, especially those paying for postsecondary education, are consumers of an educational service.

- The Securities and Exchange Commission enforces rules on how public companies disclose cybersecurity incidents, such as data breaches, and on how certain financial firms protect customer data.

Why consumer privacy protection is necessary

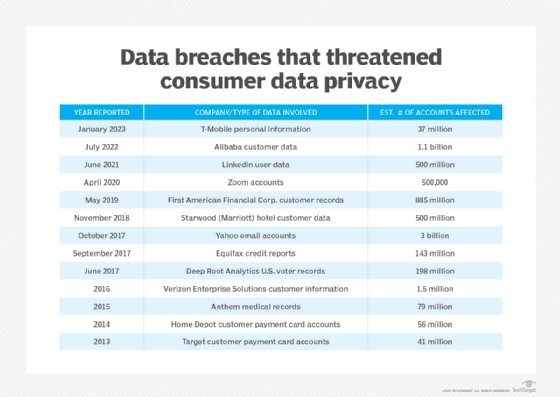

A series of high-profile data breaches, in which corporations failed to protect consumer data from hackers, has drawn attention to shortcomings in personal data protection. Several of these breaches were followed by government fines and forced resignations of corporate officers. Breaches disclosed in 2017 alone involved Uber, Yahoo and Equifax, each exposing the records of tens of millions of customers or more.

Consumer privacy concerns have grown as prominent web companies such as Google and Meta rose to the top of the business world by using web browsing data to generate revenue. Other companies, including data brokers, cable providers and cellphone manufacturers, have also sought to profit from related data products.

The privacy measures these companies offer users are also widely considered insufficient, because a social media user can only get so much protection by managing their content through an app's privacy settings. This lack of privacy has also affected user trust. A 2022 study from Insider Intelligence found that, on average, only 35% of users across different social media platforms felt safe posting on those platforms. Likewise, only 18% of users felt that Meta's Facebook protected their privacy.

Concern for corporate use of consumer data led to the creation of the GDPR to curb data misuse. The regulation requires organizations doing business in the EU to appropriately secure personal data and lets individuals access, correct and even erase their personal data. Such compliance requirements have led to a renewed emphasis on data governance, as well as data protection techniques such as anonymization and masking.
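The anonymization and masking techniques mentioned above can be sketched in a few lines. The following minimal Python sketch uses hypothetical function names and assumes that email addresses and payment card numbers are the sensitive fields. The keyed-hash function performs pseudonymization, not true anonymization. The GDPR still treats pseudonymized data as personal data because it can be linked back to individuals.

```python
import hashlib
import hmac
import re


def mask_email(email: str) -> str:
    """Masking: keep the first character of the local part and the domain."""
    local, sep, domain = email.partition("@")
    if not sep or not local:
        return "***"  # not a recognizable address, so hide it entirely
    return f"{local[0]}***@{domain}"


def mask_card_number(card: str) -> str:
    """Masking: show only the last four digits of a payment card number."""
    digits = re.sub(r"\D", "", card)  # drop spaces and dashes
    if len(digits) <= 4:
        return "*" * len(digits)
    return "*" * (len(digits) - 4) + digits[-4:]


def pseudonymize(identifier: str, key: bytes) -> str:
    """Pseudonymization: replace an identifier with a keyed hash (HMAC-SHA256).

    The same identifier and key always give the same token, so records can
    still be joined for analysis. Anyone holding the key can re-identify people
    by hashing candidate identifiers, so this is not anonymization.
    """
    normalized = identifier.strip().lower()
    return hmac.new(key, normalized.encode("utf-8"), hashlib.sha256).hexdigest()


if __name__ == "__main__":
    print(mask_email("jane.doe@example.com"))       # j***@example.com
    print(mask_card_number("4111 1111 1111 1234"))  # twelve asterisks, then 1234
    print(pseudonymize("jane.doe@example.com", b"keep-this-key-secret"))
```

A keyed hash is used instead of a plain hash because plain hashes of common identifiers, such as email addresses, can be reversed by hashing lists of known addresses. The key should be stored separately from the pseudonymized data.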

Addressing consumer privacy as a priority is also a good way to increase customer trust. According to a report from the International Association of Privacy Professionals, 64% of consumers expressed an increase in trust for companies that provide a clear explanation of their privacy policies.

Future of consumer privacy

Recent state privacy legislation, such as the CCPA and the proposed New York Privacy Act, indicates heightened concern for consumer privacy among various institutions. As technology advances and internet-connected devices are increasingly used in everyday tasks and transactions, data becomes more detailed and therefore more valuable to those who can profit from it. Newer privacy laws and browser restrictions on third-party cookies might further protect consumer privacy, but companies can still use zero- and first-party data to market content.

As another example, artificial intelligence (AI) and machine learning algorithms often require massive amounts of data to train models and identify patterns. The rapidly growing investment in these data-hungry technologies suggests that interest in data collection will be sustained for the foreseeable future. That, in turn, increases the need for consumer privacy policies and frameworks that address new trends in data collection.

One case that shows how these emerging technologies might continue to stir up privacy concerns is Project Nightingale, a partnership between Google and Ascension, one of the largest healthcare systems in the U.S. Through the partnership, which came to light in late 2019, Google gained access to the health records of up to 50 million patients, with the aim of using the data to create tools that enhance patient care. Google also expressed plans to use emergent medical data in this process. Emergent medical data is nonmedical data that can be turned into sensitive health information using AI.

However, questions remained about several issues: the type and amount of information that would be provided to Google, whether patients would be notified in advance, whether patients could opt out, how many Google employees would have access to health data and how those employees would be approved to access it.

Although the partnership aimed to help millions of people and could have changed the healthcare landscape for the better, it raised notable privacy concerns. Ascension healthcare providers and their patients were unaware that patients' medical records were being shared with Google. Some argue that HIPAA's rules on third-party use of data are out of date, allowing a concerning lack of transparency in the partnership. Others believe most of the concern surrounding the partnership is misplaced.

Overall, the competing trends of increasingly advanced data collection technology and improved consumer privacy measures and policies are likely to define the future of consumer privacy. Corporations will likely find new data collection methods, and consumers will likely react with an increased expectation of transparency.
